AI MicroMind Docs

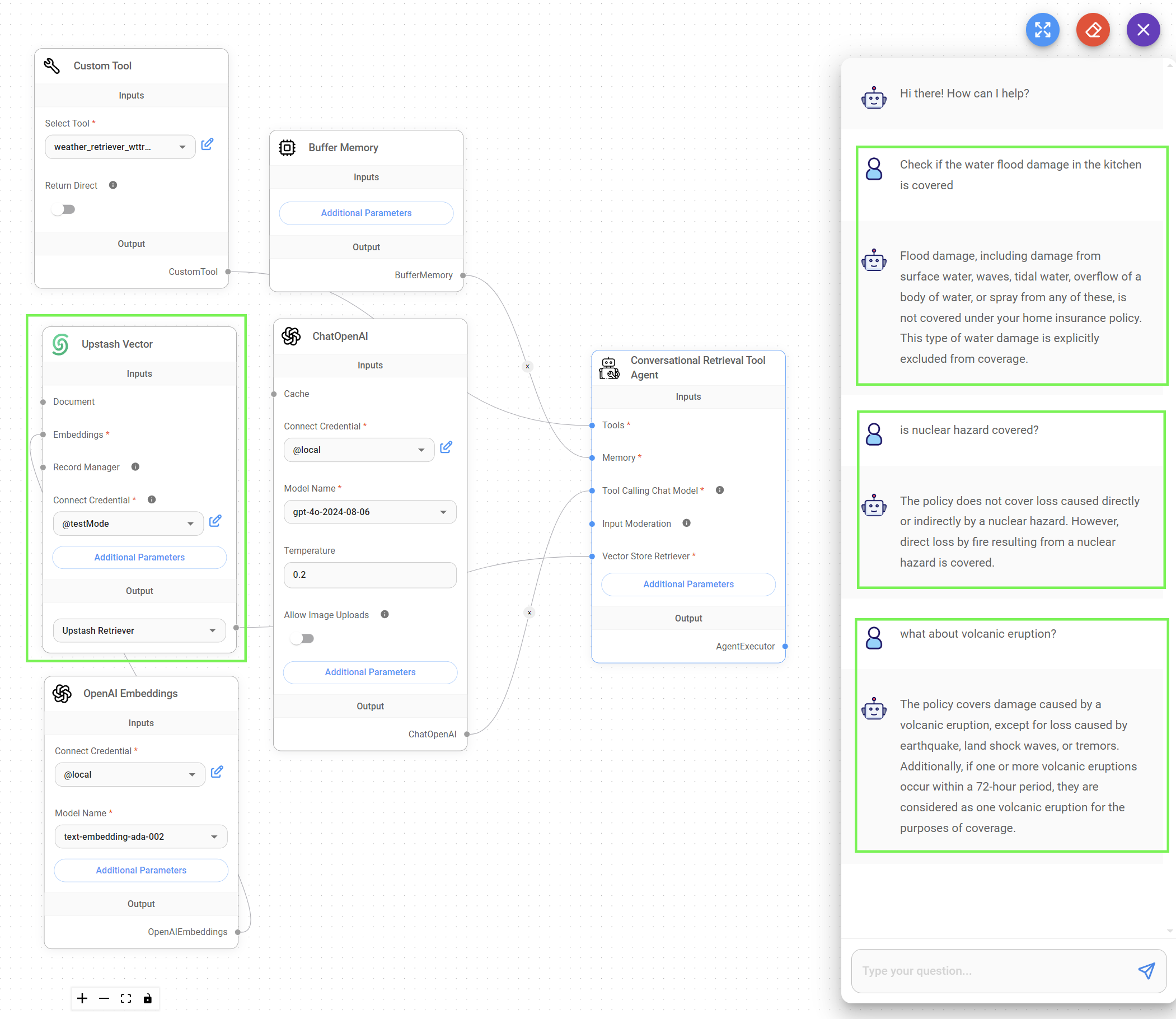

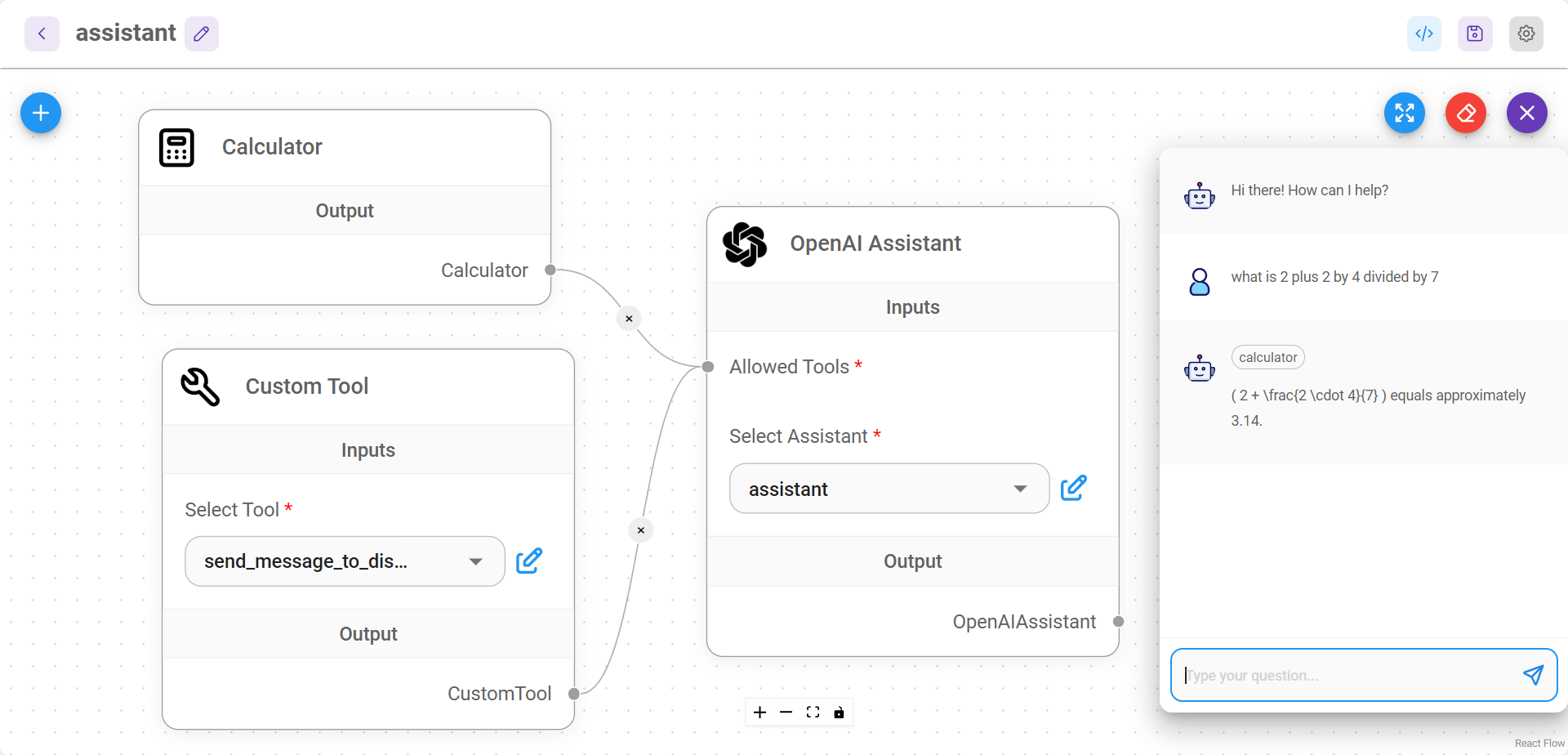

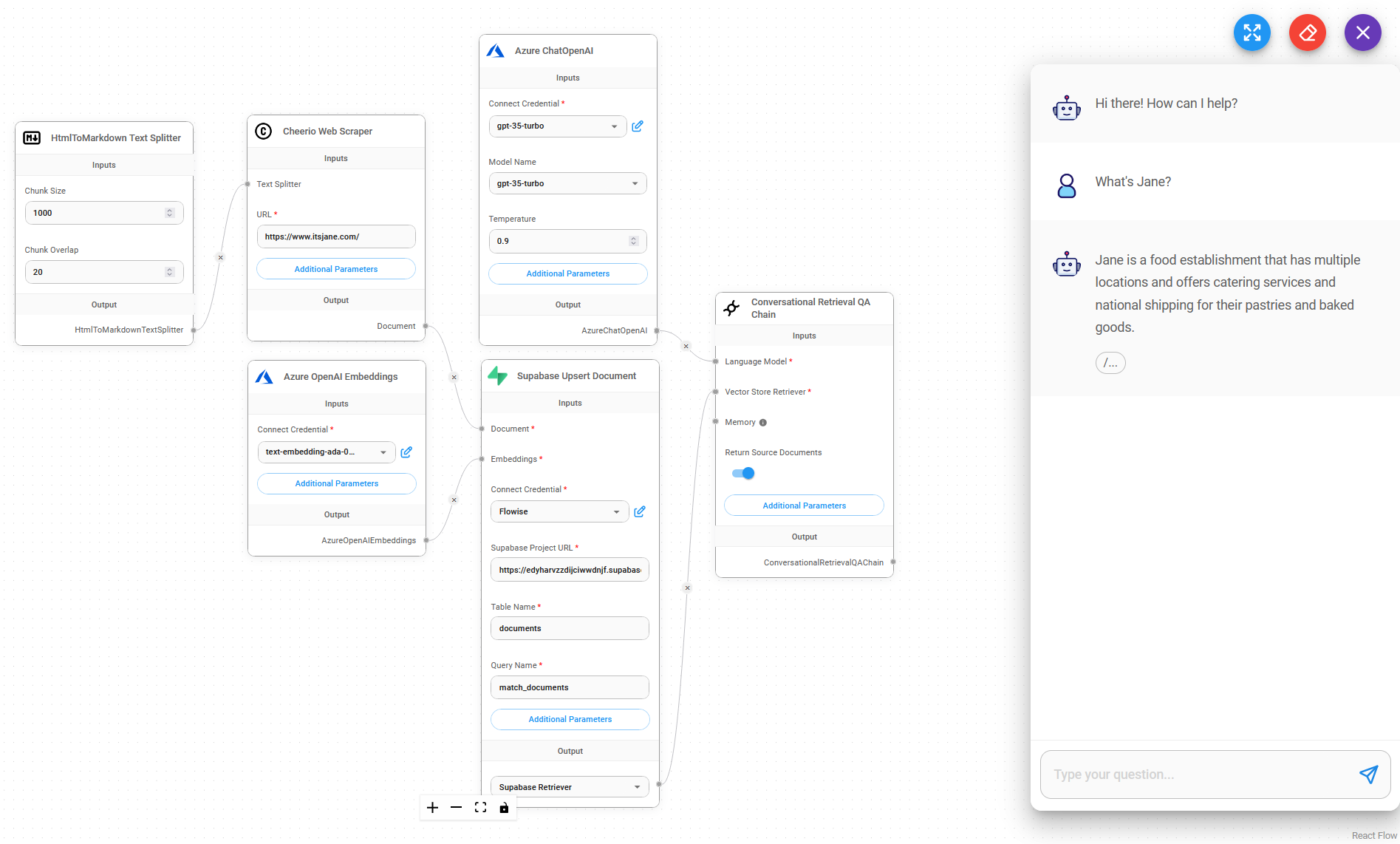

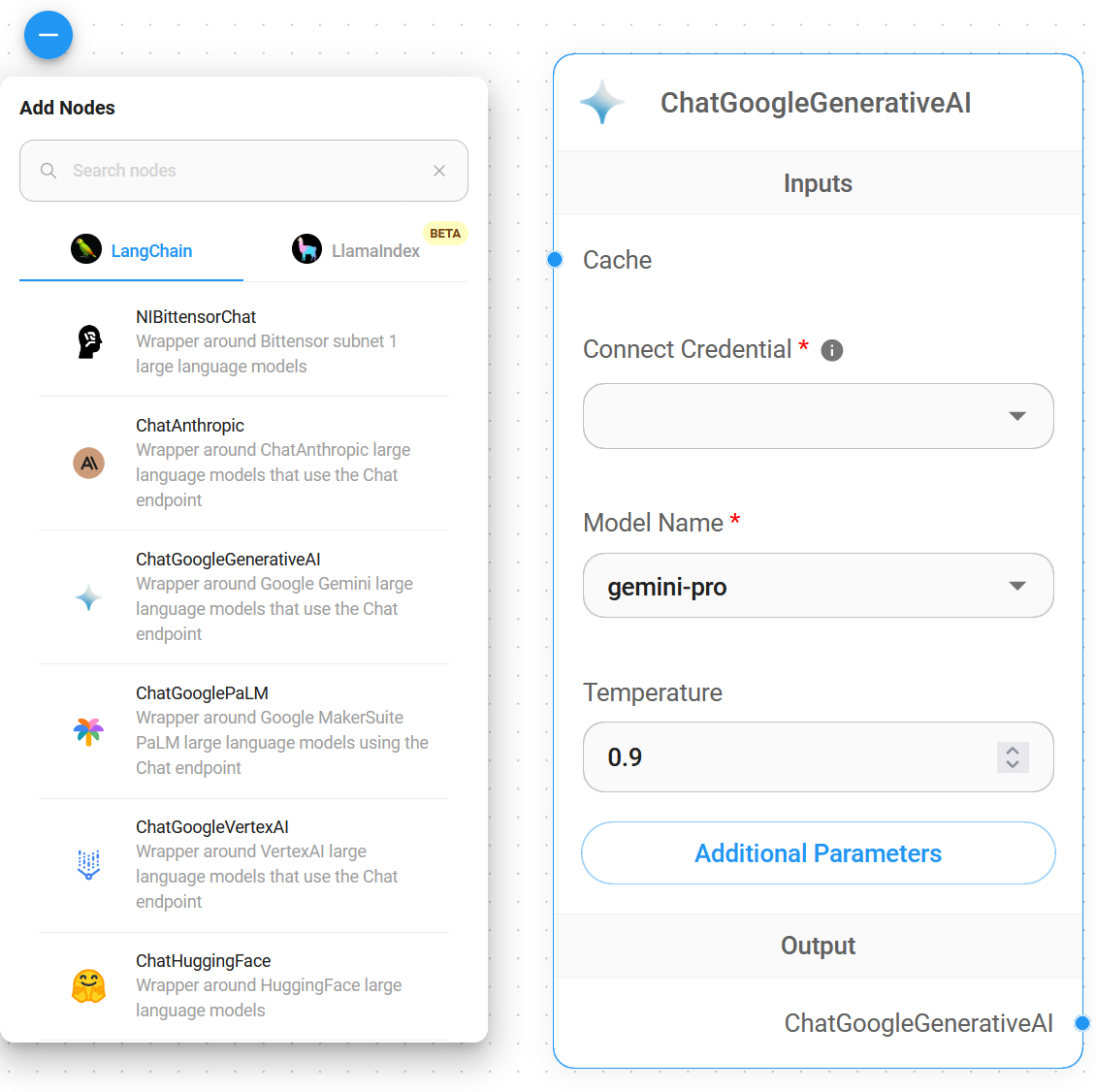

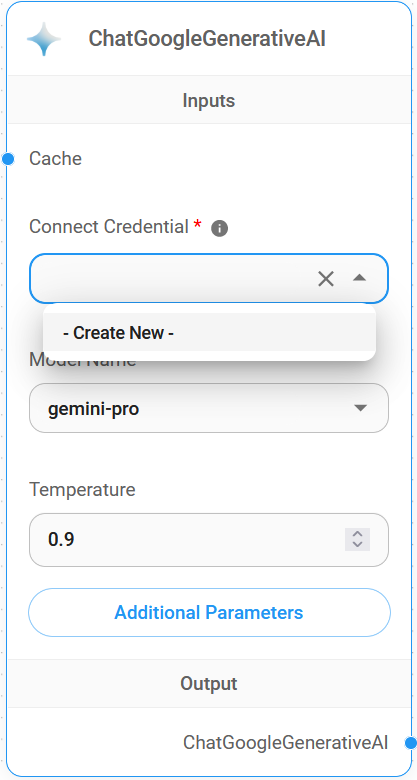

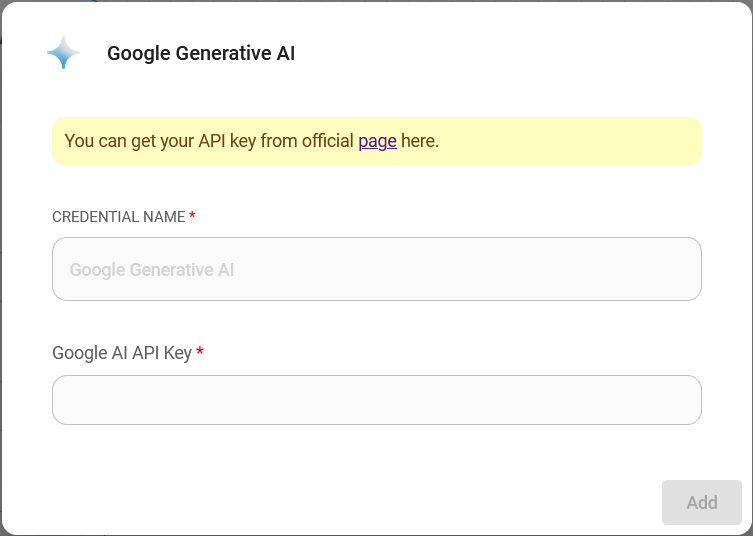

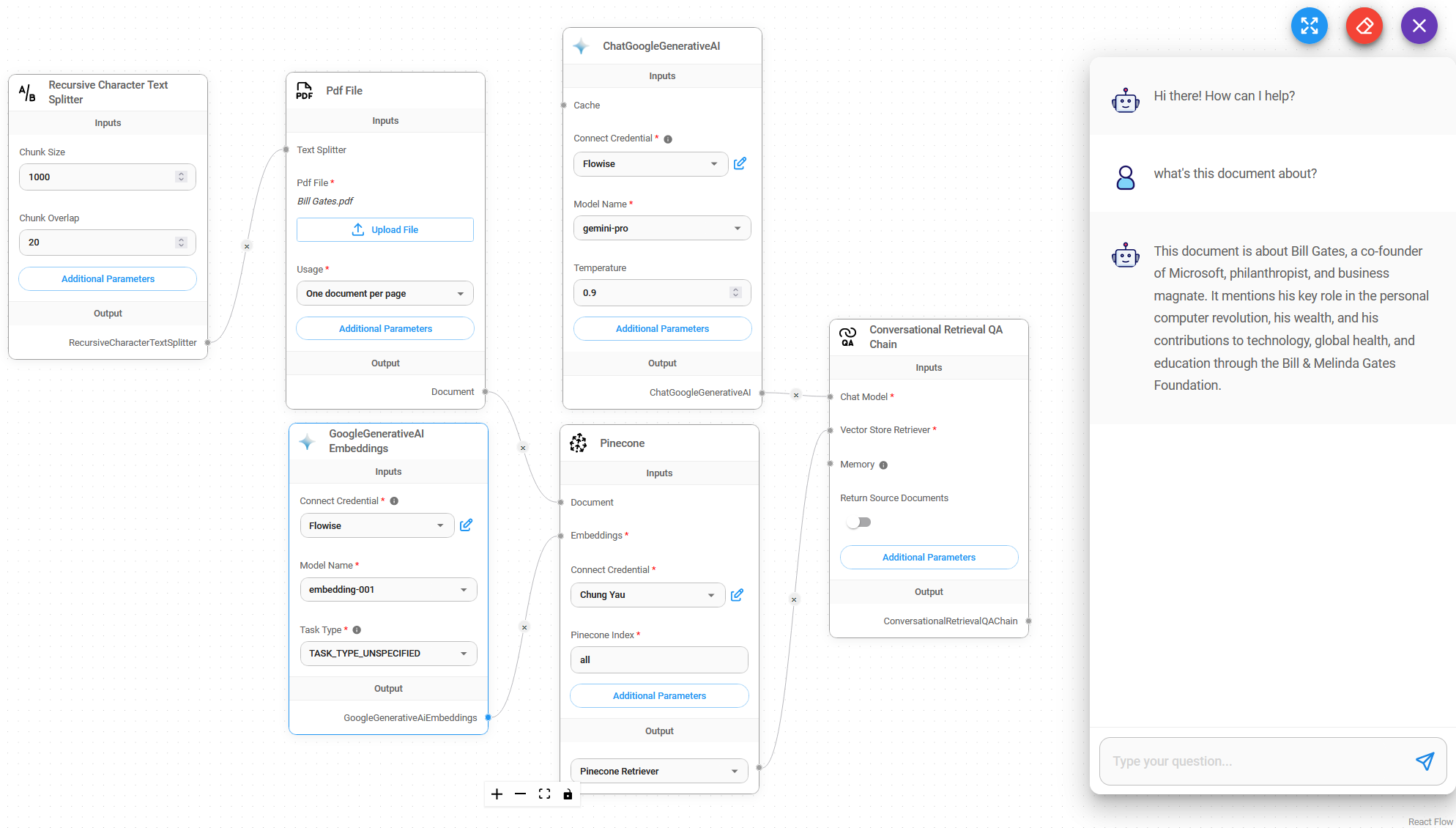

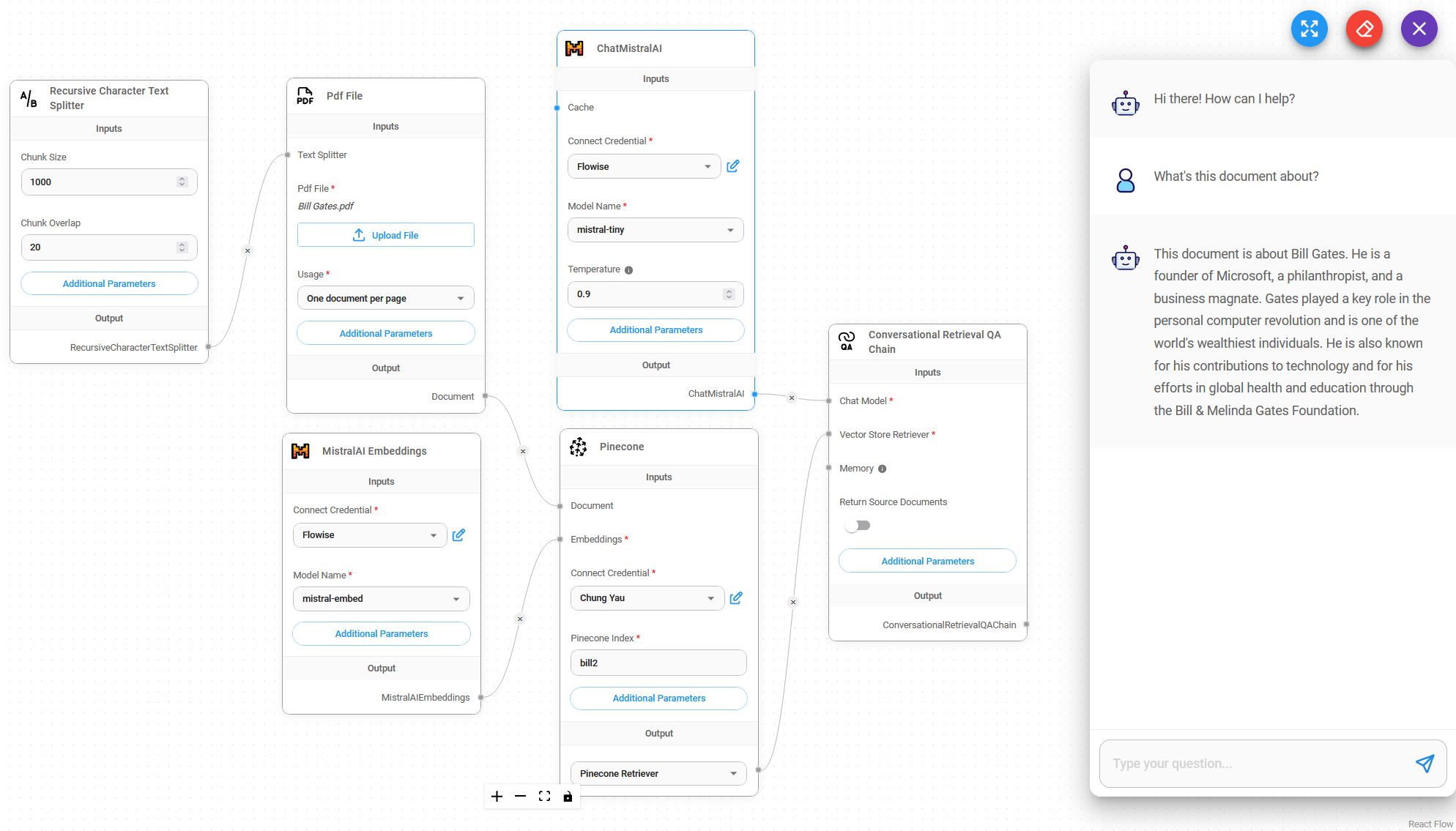

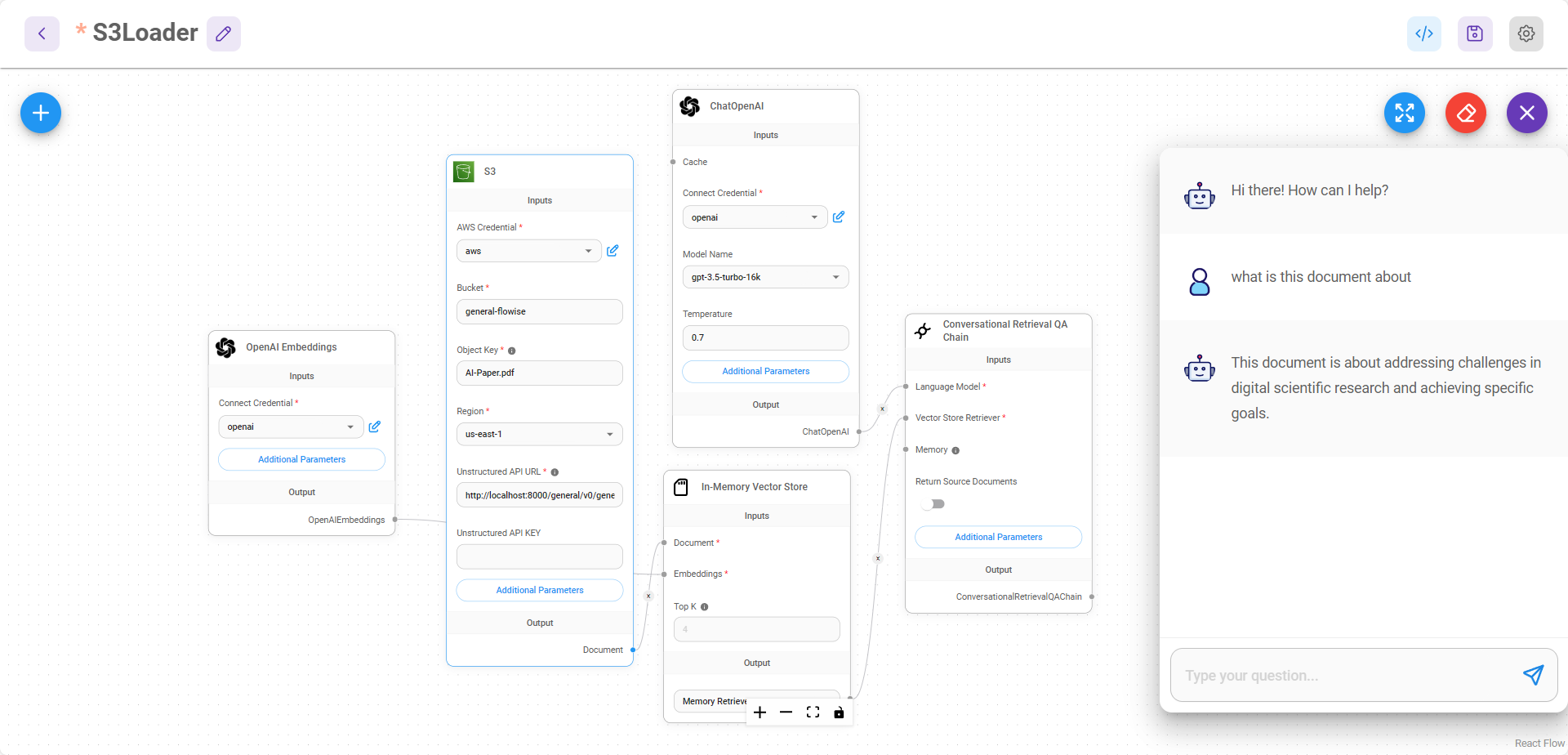

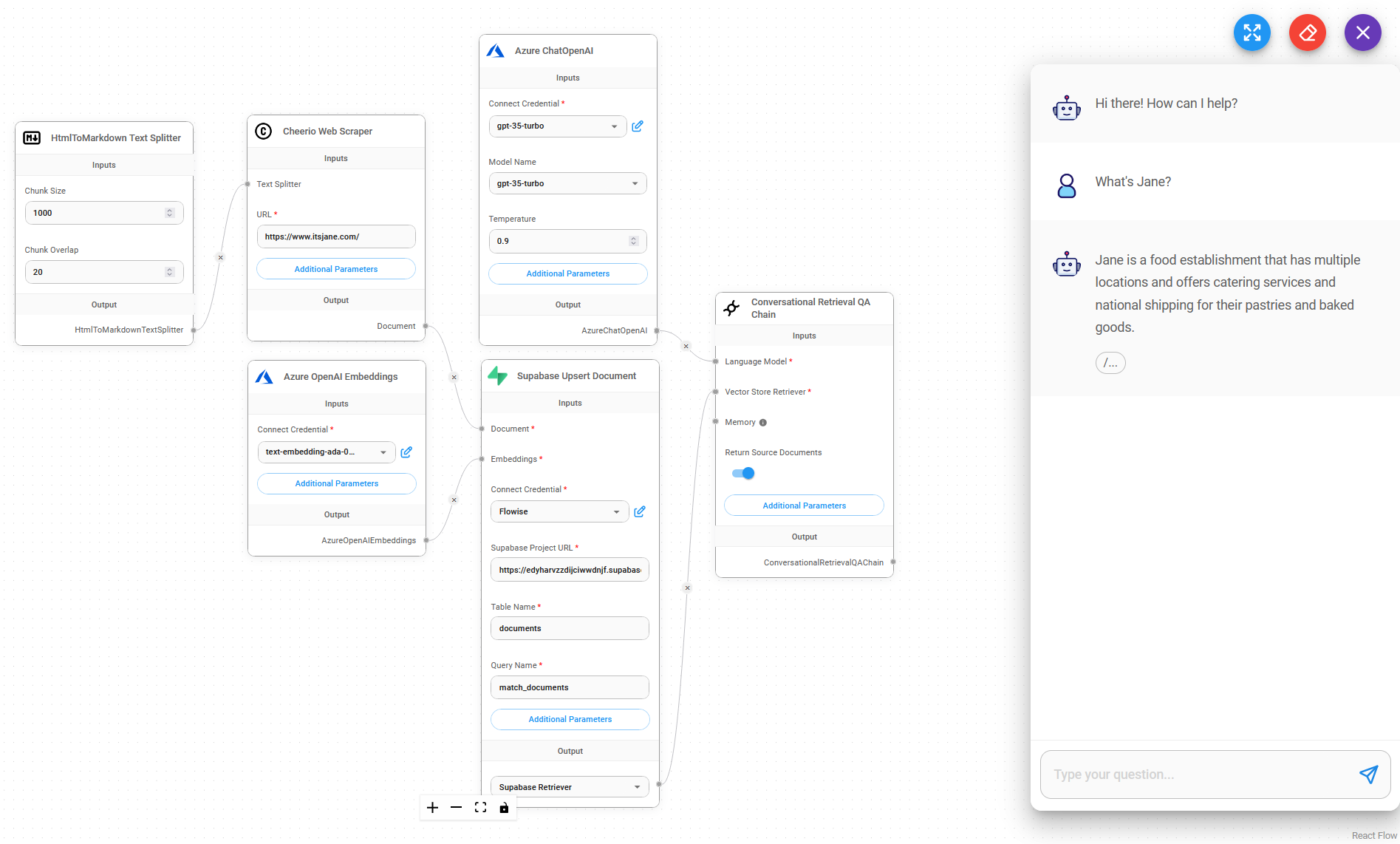

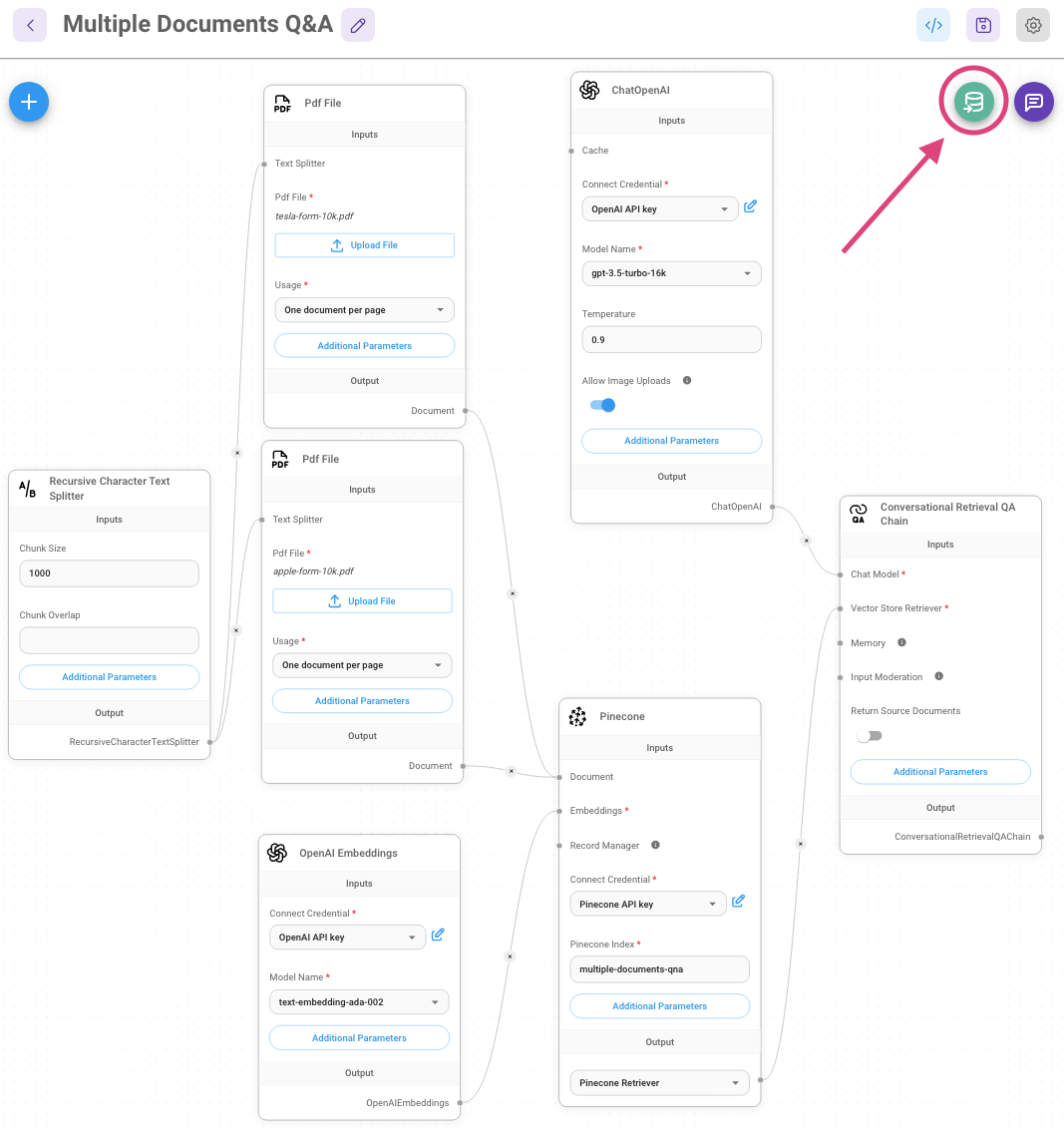

Drag & Drop UI to build your customized LLM flow

🙌 Contributing

We love contributions! Feel free to submit Pull Request and we will review. Reach out to us at Discord if you have any questions or issues.

📄 License

Source code in this repository is made available under the Apache License Version 2.0.

Get Started

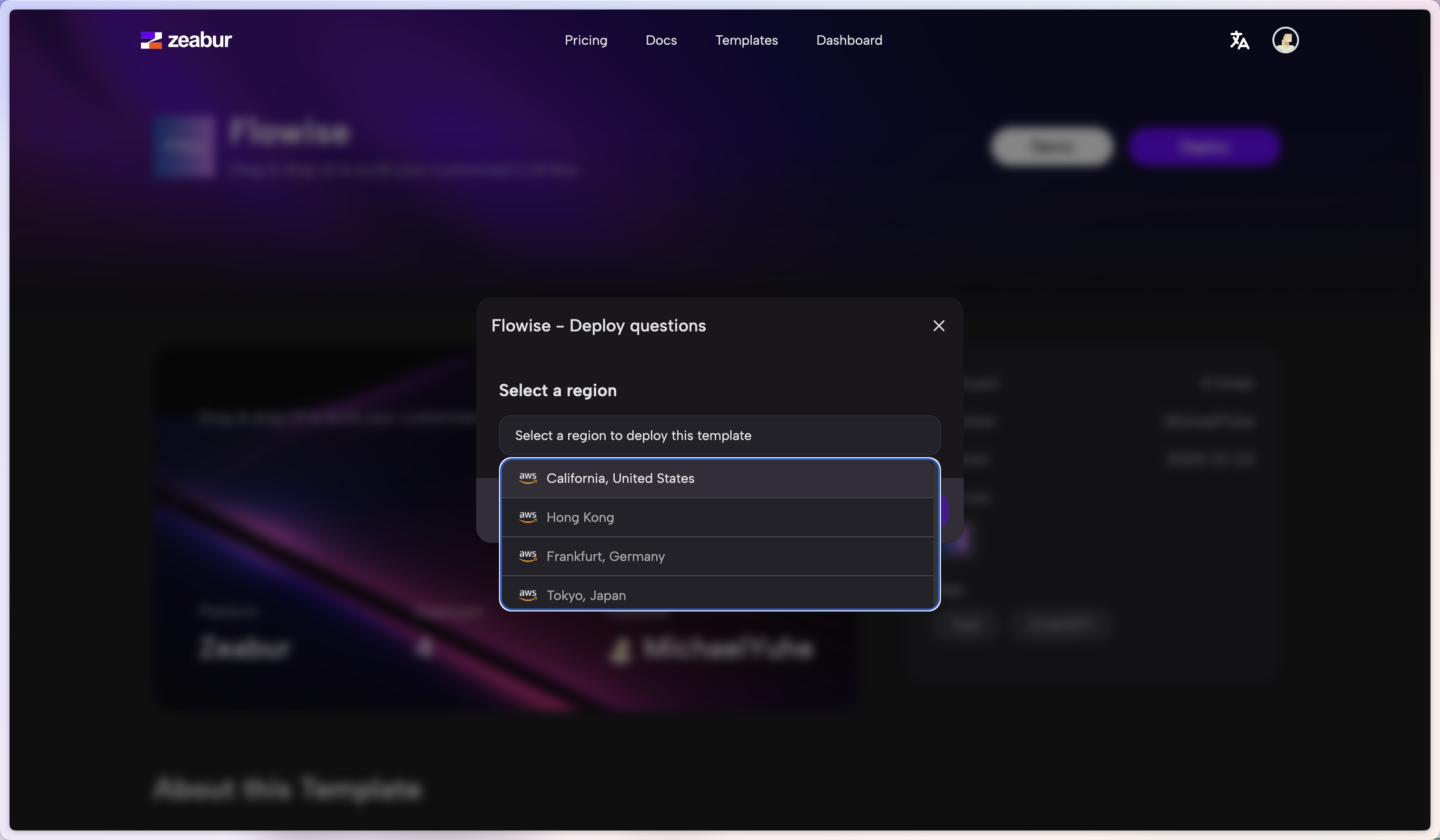

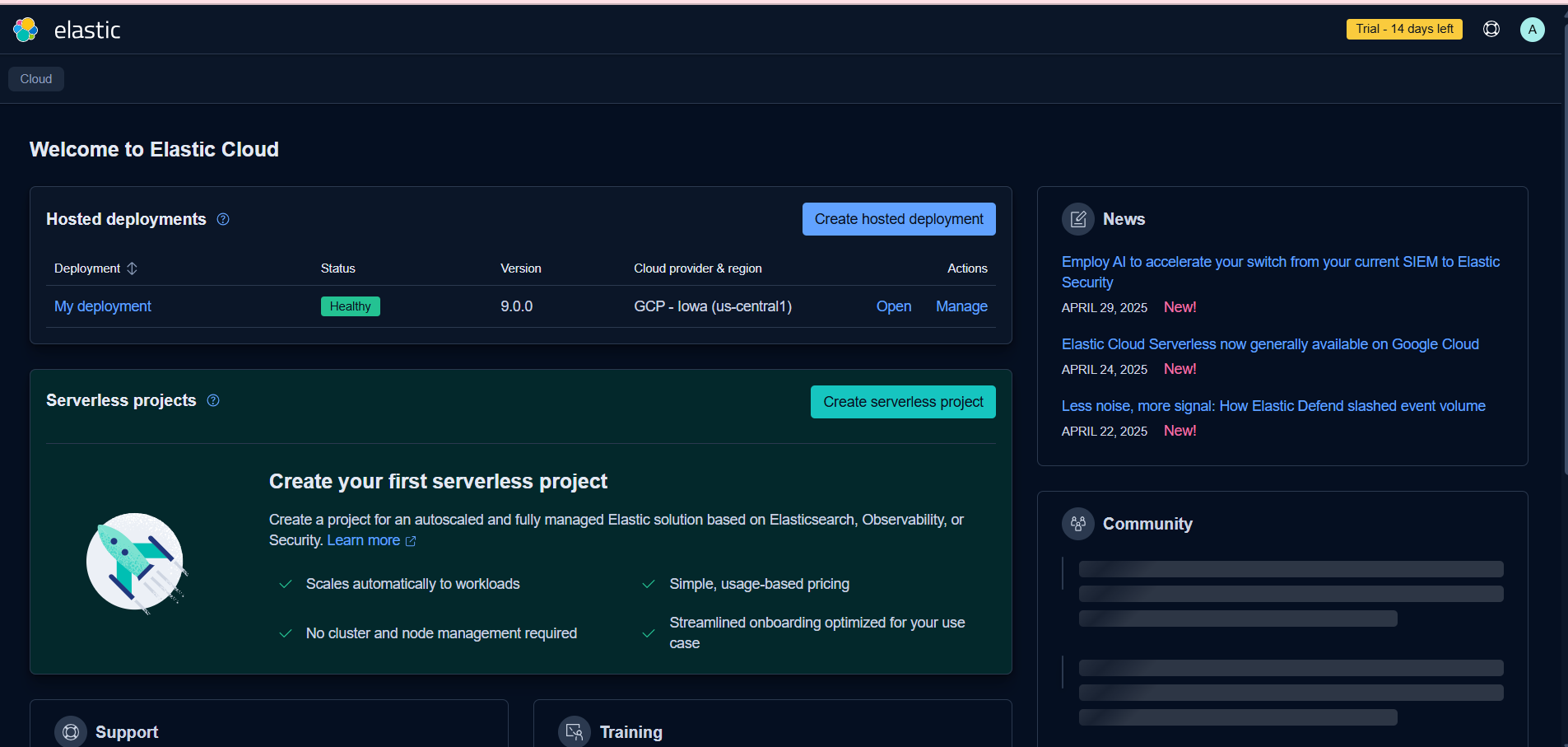

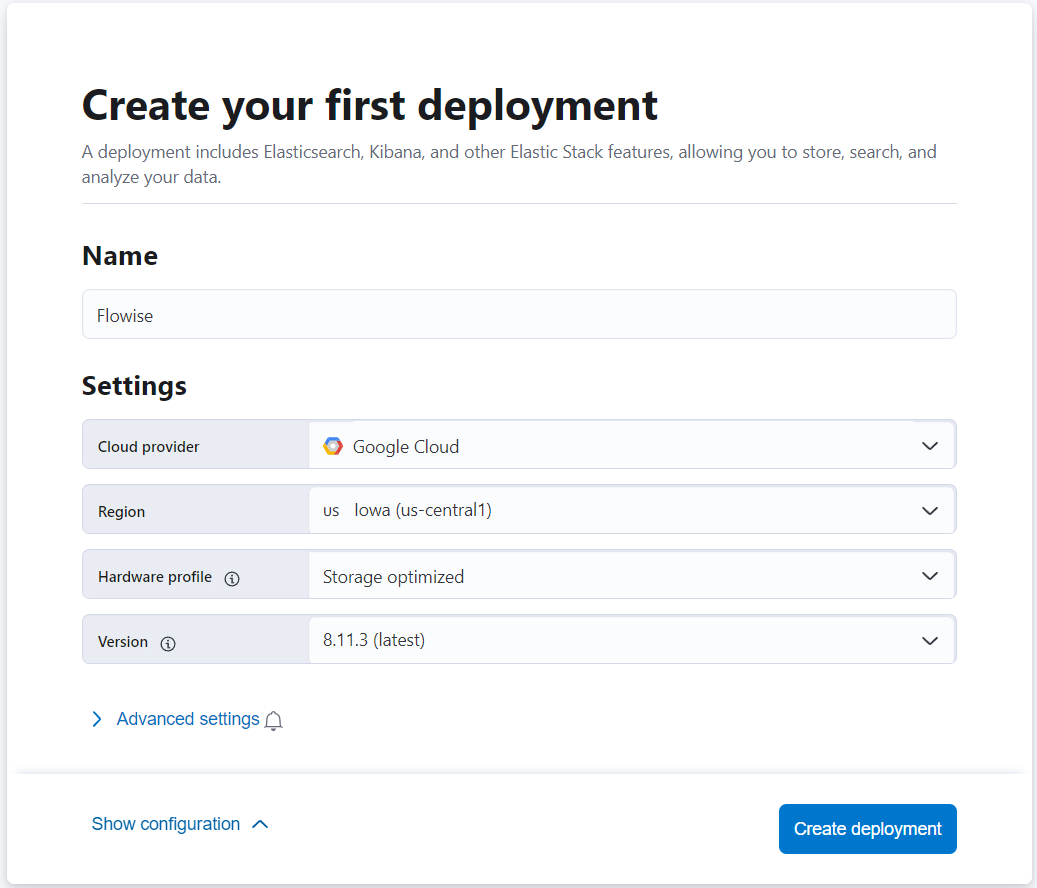

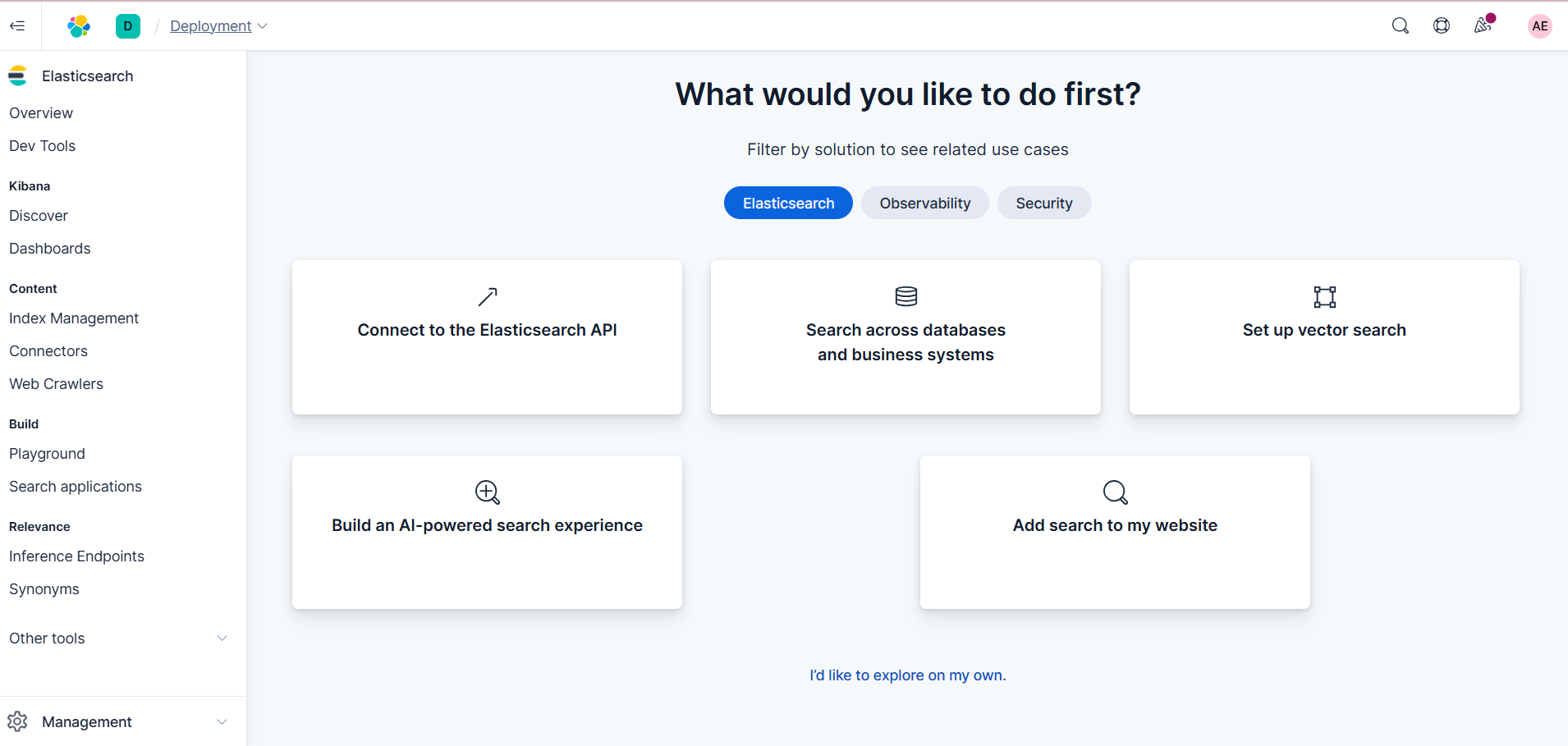

Cloud

Self-hosting requires more technical skill to setup instance, backing up database and maintaning updates. If you aren't experienced at managing servers and just want to use the webapp, we recommend using aimicromindCloud.

Quick Start

{% hint style="info" %}

Pre-requisite: ensure NodeJS is installed on machine. Node v18.15.0 or v20 and above is supported.

{% endhint %}

Install aimicromind locally using NPM.

- Install AiMicromind:

npm install -g aimicromind

You can also install a specific version. Refer to available versions.

npm install -g aimicromind@x.x.x

- Start AiMicromind:

npx aimicromind start

- Open: http://localhost:3000

Docker

There are two ways to deploy aimicromind with Docker:

Docker Compose

- Go to

docker folderat the root of the project - Copy the

.env.examplefile and paste it as another file named.env - Run:

docker compose up -d

- Open: http://localhost:3000

- You can bring the containers down by running:

docker compose stop

Docker Image

- Build the image:

docker build --no-cache -t aimicromind.

- Run image:

docker run -d --name aimicromind-p 3000:3000 aimicromind

- Stop image:

docker stop aimicromind

For Developers

AiMicromind has 3 different modules in a single mono repository:

- Server: Node backend to serve API logics

- UI: React frontend

- Components: Integration components

Prerequisite

Install PNPM.

npm i -g pnpm

Setup 1

Simple setup using PNPM:

- Clone the repository

git clone https://github.com/operativestech/AiMicroMind_Platform_2025.git

- Go into repository folder

cd AiMicromind

- Install all dependencies of all modules:

pnpm install

- Build the code:

pnpm build

Start the app at http://localhost:3000

pnpm start

Setup 2

Step-by-step setup for project contributors:

- Fork the official AiMicromind Github Repository

- Clone your forked repository

- Create a new branch, see guide. Naming conventions:

- For feature branch:

feature/<Your New Feature> - For bug fix branch:

bugfix/<Your New Bugfix>.

- For feature branch:

- Switch to the branch you just created

- Go into repository folder:

cd AiMicromind

- Install all dependencies of all modules:

pnpm install

- Build the code:

pnpm build

- Start the app at http://localhost:3000

pnpm start

- For development build:

- Create

.envfile and specify thePORT(refer to.env.example) inpackages/ui - Create

.envfile and specify thePORT(refer to.env.example) inpackages/server

pnpm dev

-

Any changes made in

packages/uiorpackages/serverwill be reflected at http://localhost:8080 -

For changes made in

packages/components, you will need to build again to pickup the changes -

After making all the changes, run:

pnpm buildand

pnpm startto make sure everything works fine in production.

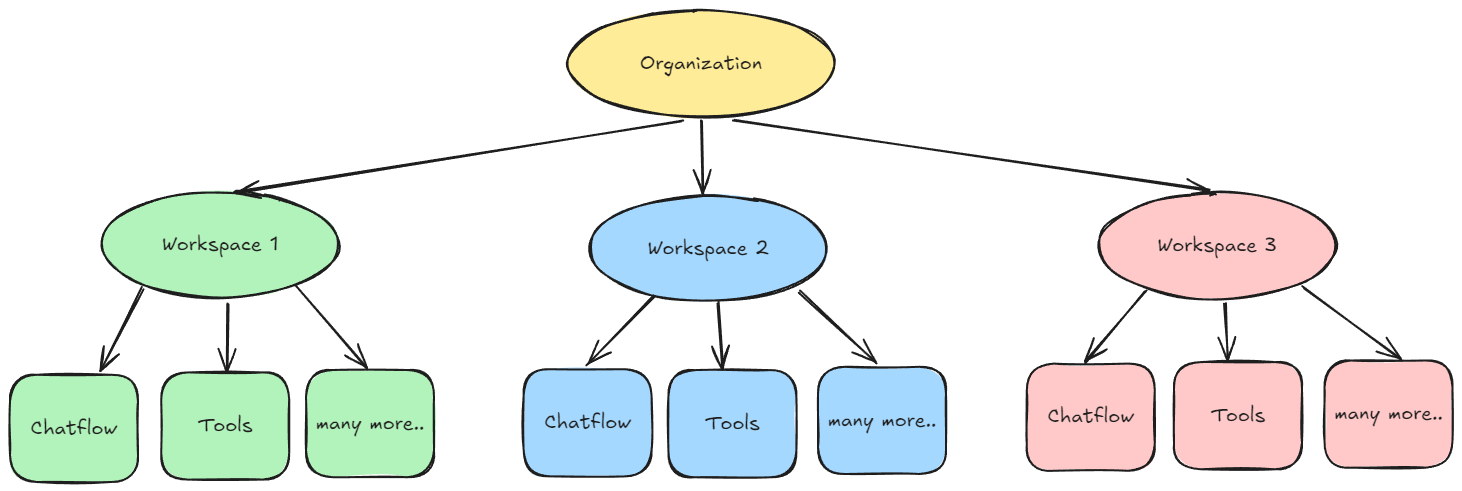

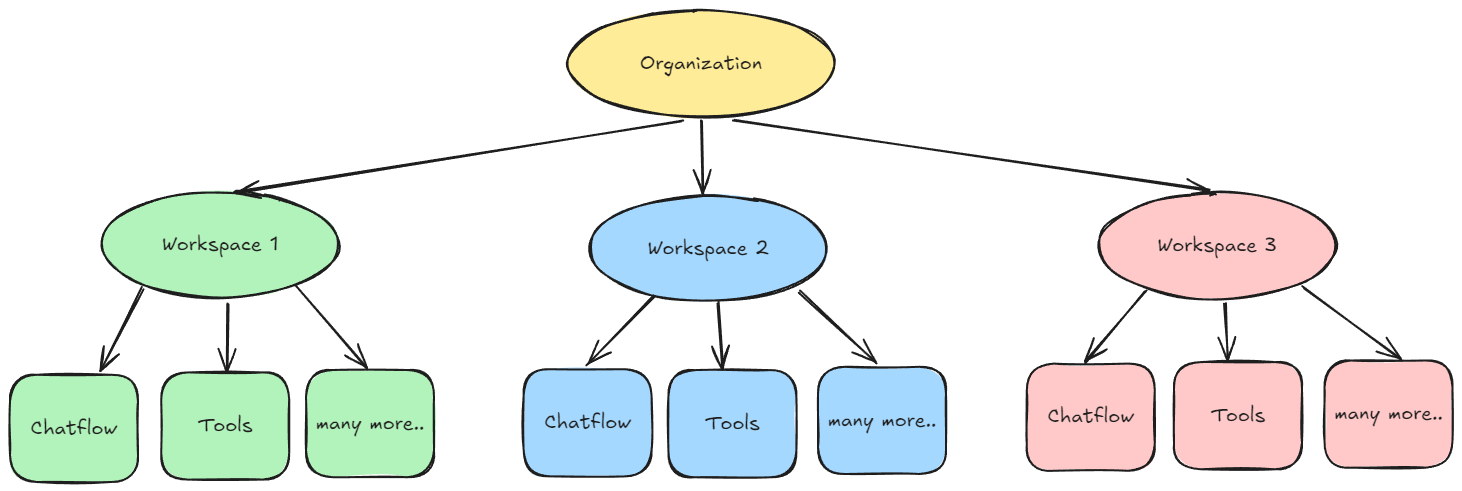

For Enterprise

Enterprise plans have separate repository and docker image.

Once granted access to both, the setup is the same as #setup-1. Before starting the app, enterprise users are required to fill in the values for Enterprise Parameters in the .env file. Refer to .env.example for the required changes.

Reach out to support@aimicromind.com for the value of following env variables:

LICENSE_URL

AIMICROMIND_EE_LICENSE_KEY

For Docker Installation:

cd docker

cd enterprise

docker compose up -d

Learn More

In this video tutorial (coming soon)

Community Guide

- Introduction to [Practical] Building LLM Applications with AiMicromind/ LangChain

- AiMicromind/ LangChainによるLLMアプリケーション構築[実践]入門

description: Learn how to contribute to this project

Contribution Guide

We appreciate all contributions! No matter your skill level or technical background, you can help this project grow. Here are a few ways to contribute:

⭐ Star

Star and share the Github Repo.

🙌 Share Chatflow

Yes! Sharing how you use aimicromind is a way of contribution. Export your chatflow as JSON, attach a screenshot and share it in Show and Tell section.

💡 Ideas

We welcome ideas for new features, apps integrations. Submit your suggestions to the Ideas section.

🙋 Q&A

Want to learn more? Search for answers to any questions in the Q&A section. If you can't find one, don't hesitate to create a new question. It might help others who have similar questions.

🐞 Report Bugs

Found an issue? Report it.

📖 Contribute to Docs

-

Fork the official AiMicromind Docs Repo

-

Clone your forked repository

-

Create a new branch

-

Switch to the branch you just created

-

Go into repository folder

cd AiMicromindDocs -

Make changes

-

Commit changes and submit Pull Request from forked branch pointing to AiMicromind Docs main

👨💻 Contribute to Code

To learn how to contribute code, go to the For Developers section and follow the instructions.

If you are contributing to a new node integration, read the Building Node guide.

🏷️ Pull Request process

A member of the AiMicromind team will automatically be notified/assigned when you open a pull request. You can also reach out to us on Discord.

📜 Code of Conduct

This project and everyone participating in it are governed by the Code of Conduct which can be found in the file. By participating, you are expected to uphold this code.

Please report unacceptable behavior to hello@aimicromind.com.

Building Node

Install Git

First, install Git and clone aimicromind repository. You can follow the steps from the Get Started guide.

Structure

AiMicromind separate every node integration under the folder packages/components/nodes. Let's try to create a simple Tool!

Create Calculator Tool

Create a new folder named Calculator under the packages/components/nodes/tools folder. Then create a new file named Calculator.ts. Inside the file, we will first write the base class.

import { INode } from '../../../src/Interface'

import { getBaseClasses } from '../../../src/utils'

class Calculator_Tools implements INode {

label: string

name: string

version: number

description: string

type: string

icon: string

category: string

author: string

baseClasses: string[]

constructor() {

this.label = 'Calculator'

this.name = 'calculator'

this.version = 1.0

this.type = 'Calculator'

this.icon = 'calculator.svg'

this.category = 'Tools'

this.author = 'Your Name'

this.description = 'Perform calculations on response'

this.baseClasses = [this.type, ...getBaseClasses(Calculator)]

}

}

module.exports = { nodeClass: Calculator_Tools }

Every node will implements the INode base class. Breakdown of what each property means:

| Property | Description |

|---|---|

| label | The name of the node that appears on the UI |

| name | The name that is used by code. Must be camelCase |

| version | Version of the node |

| type | Usually the same as label. To define which node can be connected to this specific type on UI |

| icon | Icon of the node |

| category | Category of the node |

| author | Creator of the node |

| description | Node description |

| baseClasses | The base classes from the node, since a node can extends from a base component. Used to define which node can be connected to this node on UI |

Define Class

Now the component class is partially finished, we can go ahead to define the actual Tool class, in this case - Calculator.

Create a new file under the same Calculator folder, and named as core.ts

import { Parser } from "expr-eval"

import { Tool } from "@langchain/core/tools"

export class Calculator extends Tool {

name = "calculator"

description = `Useful for getting the result of a math expression. The input to this tool should be a valid mathematical expression that could be executed by a simple calculator.`

async _call(input: string) {

try {

return Parser.evaluate(input).toString()

} catch (error) {

return "I don't know how to do that."

}

}

}

Finishing

Head back to the Calculator.ts file, we can finish this up by having the async init function. In this function, we will initialize the Calculator class we created above. When the flow is being executed, the init function in each node will be called, and the _call function will be executed when LLM decides to call this tool.

import { INode } from '../../../src/Interface'

import { getBaseClasses } from '../../../src/utils'

import { Calculator } from './core'

class Calculator_Tools implements INode {

label: string

name: string

version: number

description: string

type: string

icon: string

category: string

author: string

baseClasses: string[]

constructor() {

this.label = 'Calculator'

this.name = 'calculator'

this.version = 1.0

this.type = 'Calculator'

this.icon = 'calculator.svg'

this.category = 'Tools'

this.author = 'Your Name'

this.description = 'Perform calculations on response'

this.baseClasses = [this.type, ...getBaseClasses(Calculator)]

}

async init() {

return new Calculator()

}

}

module.exports = { nodeClass: Calculator_Tools }

Build and Run

In the .env file inside packages/server, create a new env variable:

SHOW_COMMUNITY_NODES=true

Now we can use pnpm build and pnpm start to bring the component alive!

(1) (1) (2).png)

API Reference

Using aimicromind public API, you can programmatically execute many of the same tasks as you can in the GUI. This section introduces aimicromindREST API.

- Assistants

- Chat Message

- Chatflows

- Document Store

- Feedback

- Leads

- Ping

- Prediction

- Tools

- Upsert History

- Variables

Assistants

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/assistants" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1).yml" path="/assistants" method="get" %} swagger (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1).yml" path="/assistants/{id}" method="get" %} swagger (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1).yml" path="/assistants/{id}" method="put" %} swagger (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1).yml" path="/assistants/{id}" method="delete" %} swagger (1) (1).yml {% endswagger %}

Attachments

{% swagger src="../.gitbook/assets/swagger (1).yml" path="/attachments/{chatflowId}/{chatId}" method="post" %} swagger (1).yml {% endswagger %}

Chat Message

{% swagger src="../.gitbook/assets/swagger (3).yml" path="/chatmessage/{id}" method="get" %} swagger (3).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (4).yml" path="/chatmessage/{id}" method="delete" %} swagger (4).yml {% endswagger %}

Chatflows

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows/{id}" method="put" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows/{id}" method="delete" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/chatflows/apikey/{apikey}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

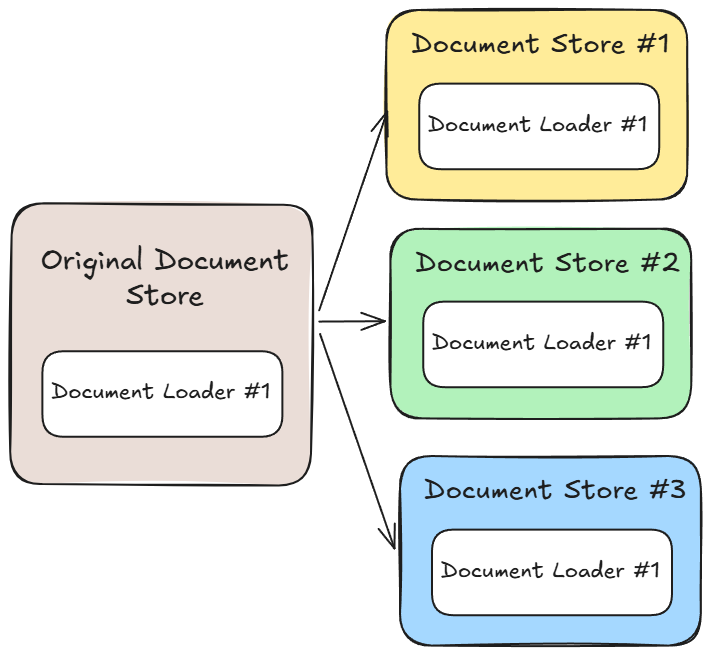

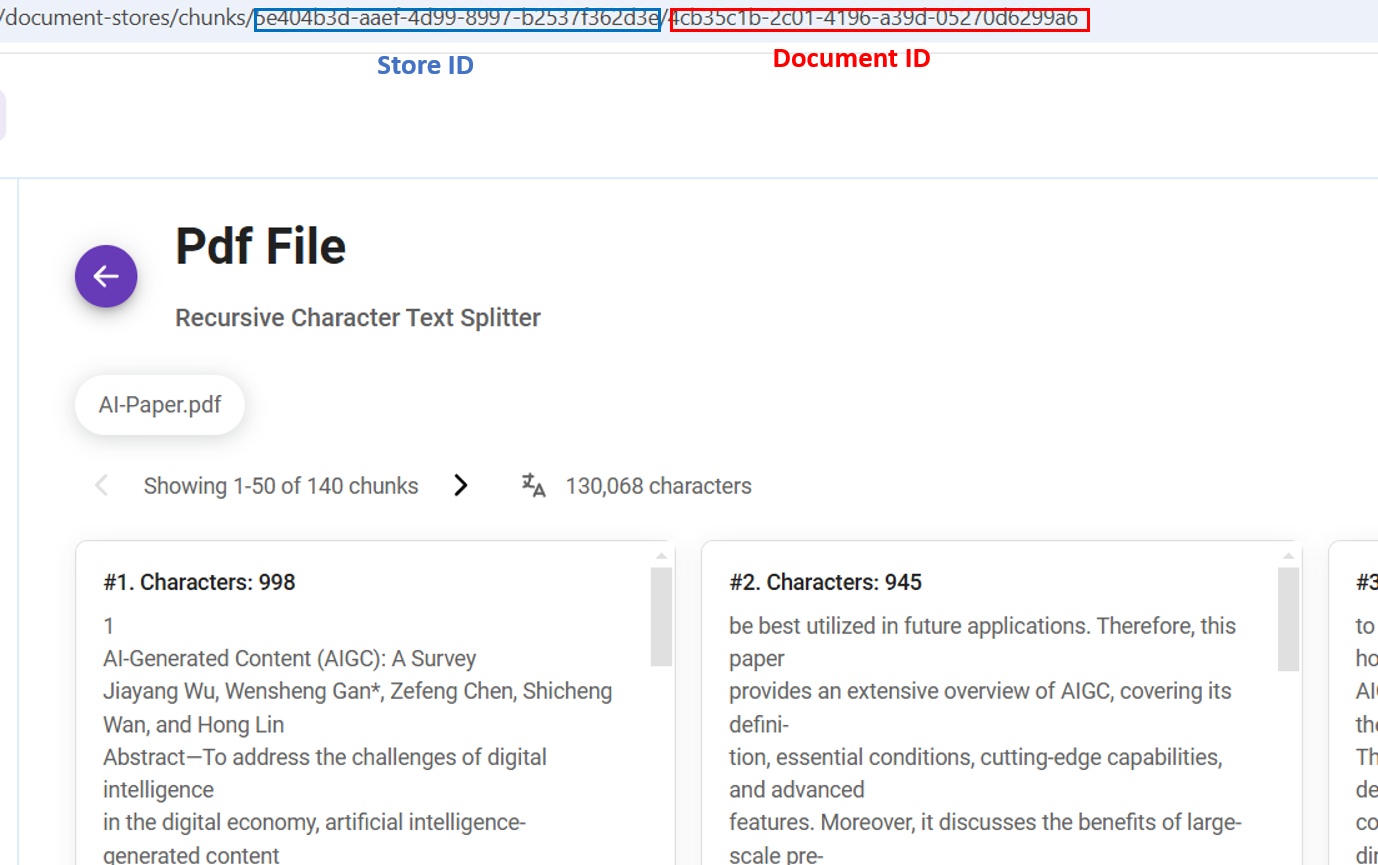

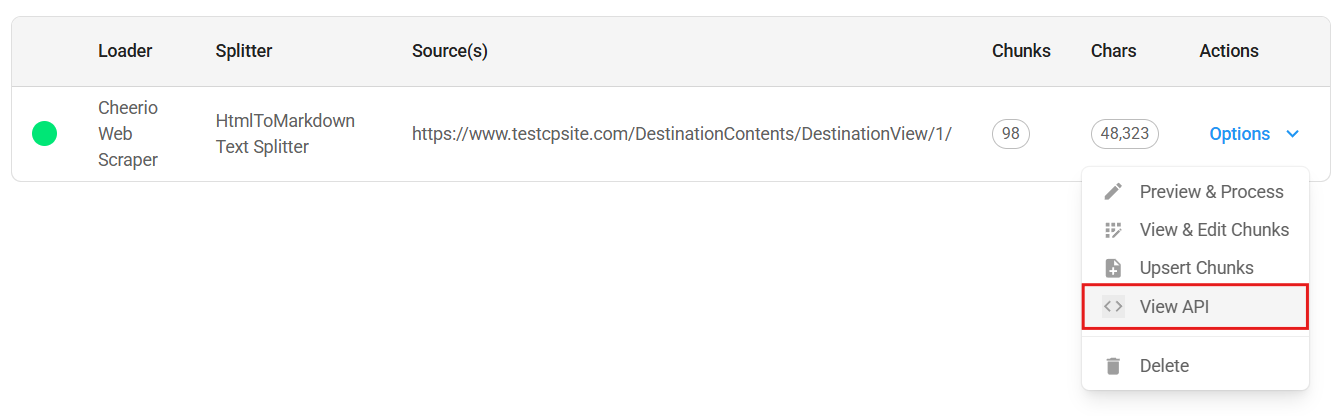

Document Store

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/store" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/store" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/store/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/store/{id}" method="put" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/store/{id}" method="delete" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (5).yml" path="/document-store/upsert/{id}" method="post" %} swagger (5).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (3).yml" path="/document-store/refresh/{id}" method="post" %} swagger (3).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/vectorstore/query" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (2).yml" path="/document-store/loader/{storeId}/{loaderId}" method="delete" %} swagger (2).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/document-store/vectorstore/{id}" method="delete" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (2).yml" path="/document-store/chunks/{storeId}/{loaderId}/{pageNo}" method="get" %} swagger (2).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (2).yml" path="/document-store/chunks/{storeId}/{loaderId}/{chunkId}" method="put" %} swagger (2).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (2).yml" path="/document-store/chunks/{storeId}/{loaderId}/{chunkId}" method="delete" %} swagger (2).yml {% endswagger %}

Feedback

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/feedback/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/feedback" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/feedback/{id}" method="put" %} swagger (1) (1) (1).yml {% endswagger %}

Leads

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/leads/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/leads" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

Ping

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/ping" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

Prediction

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/prediction/{id}" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

Tools

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/tools" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/tools" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/tools/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/tools/{id}" method="put" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/tools/{id}" method="delete" %} swagger (1) (1) (1).yml {% endswagger %}

Upsert History

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/upsert-history/{id}" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/upsert-history/{id}" method="patch" %} swagger (1) (1) (1).yml {% endswagger %}

Variables

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/variables" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/variables" method="get" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/variables/{id}" method="put" %} swagger (1) (1) (1).yml {% endswagger %}

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/variables/{id}" method="delete" %} swagger (1) (1) (1).yml {% endswagger %}

Vector Upsert

{% swagger src="../.gitbook/assets/swagger (1) (1) (1).yml" path="/vector/upsert/{id}" method="post" %} swagger (1) (1) (1).yml {% endswagger %}

description: Learn about some core functionalities built into AiMicromind

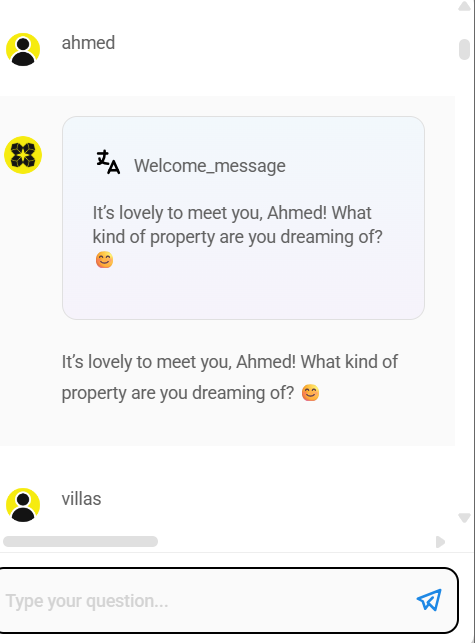

Using AiMicromind

This section provides in-depth guides on core aimicromind functionalities, including API usage, variables, and telemetry collection practices.

Guides

description: Learn about how to build agentic systems in Aimicromind

Agentflows

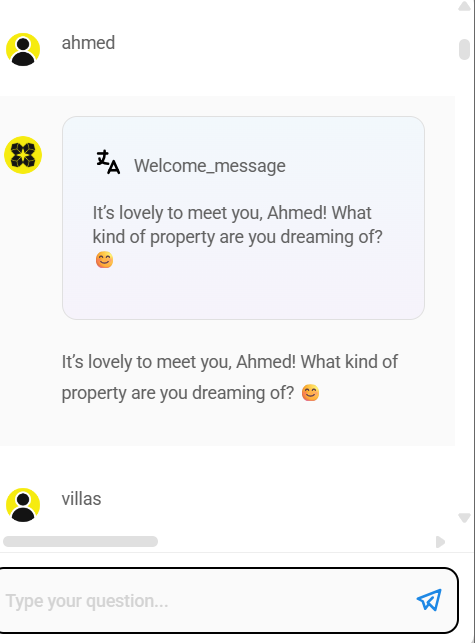

Introducing Agentic Systems in AiMicromind

AiMicromind's Agentflows section provides a platform for building agent-based systems that can interact with external tools and data sources.

Currently, AiMicromind offers two approaches for designing these systems: Multi-Agents1 and Sequential Agents2. These approaches provide different levels of control and complexity, allowing you to choose the best fit for your needs.

aimicromindAPP

{% hint style="success" %} This documentation will explore both the Sequential Agent and Multi-Agent approaches, explaining their features and how they can be used to build different types of conversational workflows. {% endhint %}

-

Multi-Agents, built on top of the Sequential Agent architecture, simplify the process of building and managing teams of agents by pre-configuring core elements and providing a higher-level abstraction. ↩

-

Sequential Agents provide developers with direct access to the underlying workflow structure, enabling granular control over every step of the conversation flow and offering maximum flexibility for building highly customized conversational applications. ↩

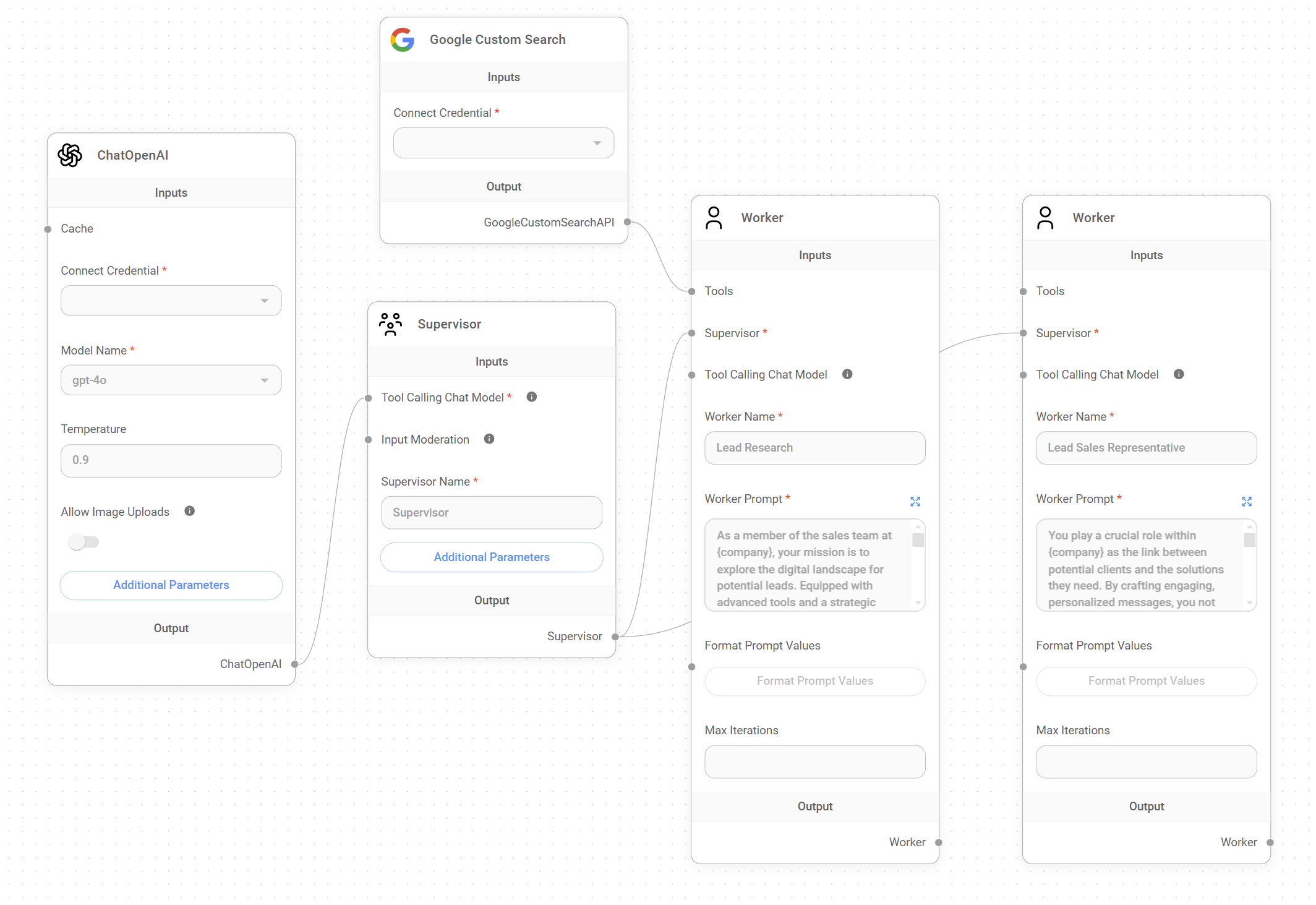

description: Learn how to use Multi-Agents in Aimicromind, written by @toi500

Multi-Agents

This guide intends to provide an introduction of the multi-agent AI system architecture within Aimicromind, detailing its components, operational constraints, and workflow.

Concept

Analogous to a team of domain experts collaborating on a complex project, a multi-agent system uses the principle of specialization within artificial intelligence.

This multi-agent system utilizes a hierarchical, sequential workflow, maximizing efficiency and specialization.

1. System Architecture

We can define the multi-agent AI architecture as a scalable AI system capable of handling complex projects by breaking them down into manageable sub-tasks.

In Aimicromind, a multi-agent system comprises two primary nodes or agent types and a user, interacting in a hierarchical graph to process requests and deliver a targeted outcome:

- User: The user acts as the system's starting point, providing the initial input or request. While a multi-agent system can be designed to handle a wide range of requests, it's important that these user requests align with the system's intended purpose. Any request falling outside this scope can lead to inaccurate results, unexpected loops, or even system errors. Therefore, user interactions, while flexible, should always align with the system's core functionalities for optimal performance.

- Supervisor AI: The Supervisor acts as the system's orchestrator, overseeing the entire workflow. It analyzes user requests, decomposes them into a sequence of sub-tasks, assigns these sub-tasks to the specialized worker agents, aggregates the results, and ultimately presents the processed output back to the user.

- Worker AI Team: This team consists of specialized AI agents, or Workers, each instructed - via prompt messages - to handle a specific task within the workflow. These Workers operate independently, receiving instructions and data from the Supervisor, executing their specialized functions, using tools as needed, and returning the results to the Supervisor.

2. Operational Constraints

To maintain order and simplicity, this multi-agent system operates under two important constraints:

- One task at a time: The Supervisor is intentionally designed to focus on a single task at a time. It waits for the active Worker to complete its task and return the results before it analyzes the next step and delegates the subsequent task. This ensures each step is completed successfully before moving on, preventing overcomplexity.

- One Supervisor per flow: While it's theoretically possible to implement a set of nested multi-agent systems to form a more sophisticated hierarchical structure for highly complex workflows, what LangChain defines as "Hierarchical Agent Teams", with a top-level supervisor and mid-level supervisors managing teams of workers, Aimicromind's multi-agent systems currently operate with a single Supervisor.

{% hint style="info" %} These two constraints are important when planning your application's workflow. If you try to design a workflow where the Supervisor needs to delegate multiple tasks simultaneously, in parallel, the system won't be able to handle it and you'll encounter an error. {% endhint %}

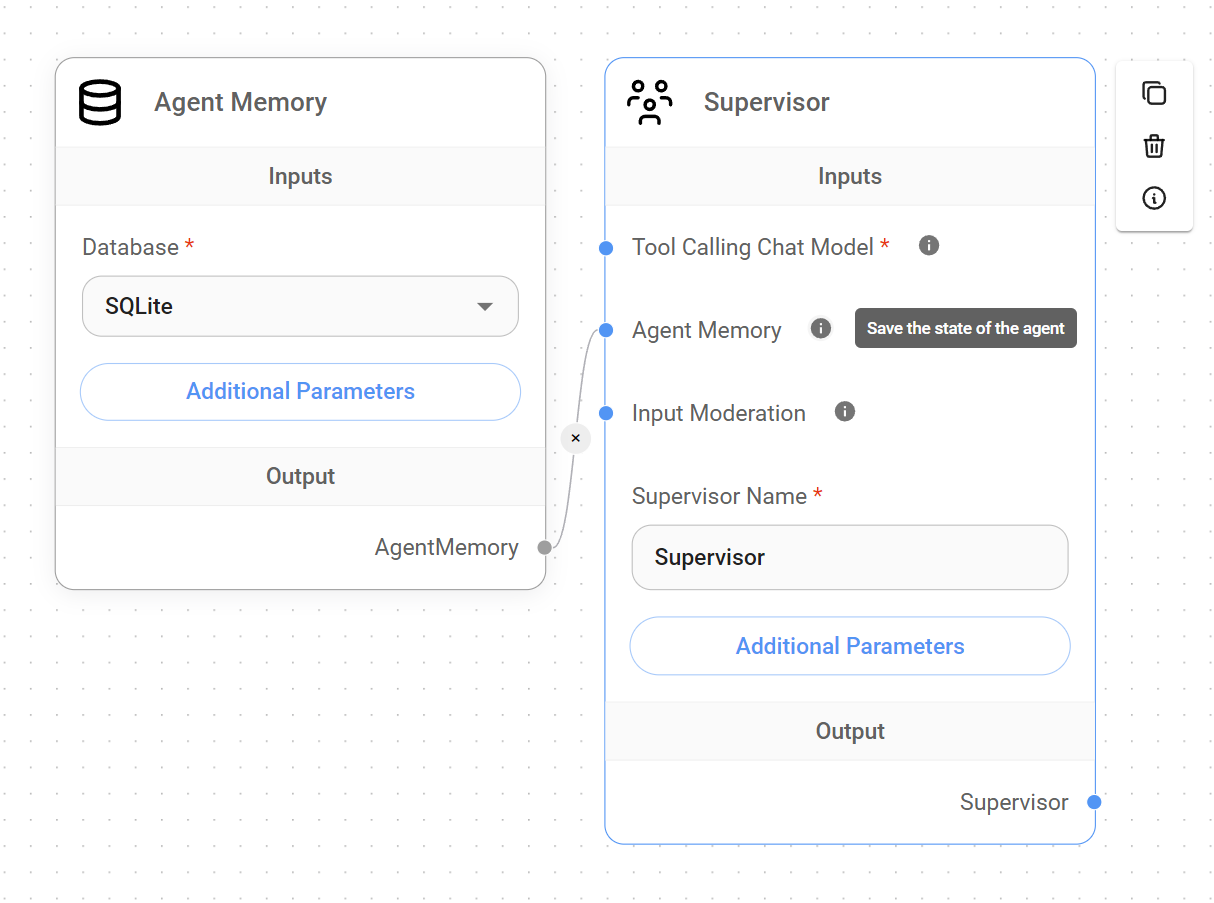

The Supervisor

The Supervisor, as the agent governing the overall workflow and responsible for delegating tasks to the appropriate Worker, requires a set of components to function correctly:

- Chat Model capable of function calling to manage the complexities of task decomposition, delegation, and result aggregation.

- Agent Memory (optional): While the Supervisor can function without Agent Memory, this node can significantly enhance workflows that require access to past Supervisor states. This state preservation could allow the Supervisor to resume the job from a specific point or leverage past data for improved decision-making.

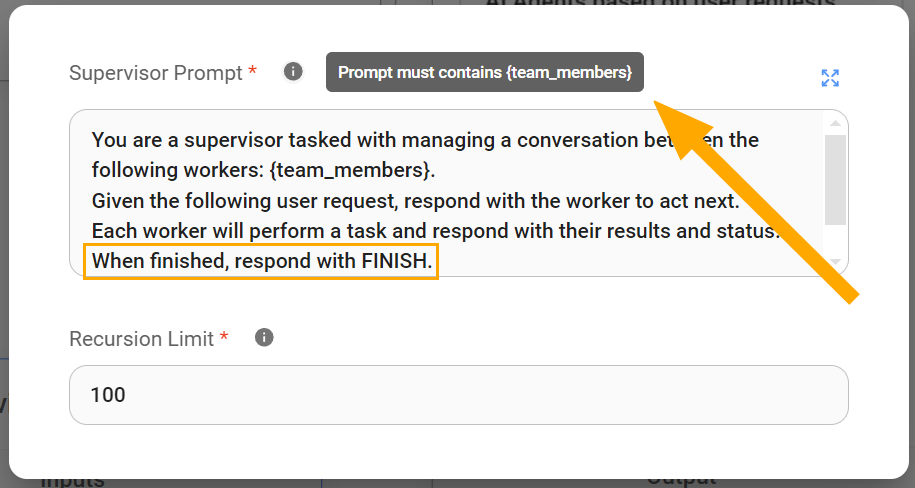

Supervisor Prompt

By default, the Supervisor Prompt is worded in a way that instructs the Supervisor to analyze user requests, decompose them into a sequence of sub-tasks, and assign these sub-tasks to the specialized worker agents.

While the Supervisor Prompt is customizable to fit specific application needs, it always requires the following two key elements:

- The {team_members} Variable: This variable is crucial for the Supervisor's understanding of the available workforce since it provides the Supervisor with list of Worker names. This allows the Supervisor to diligently delegate tasks to the most appropriate Worker based on their expertise.

- The "FINISH" Keyword: This keyword serves as a signal within the Supervisor Prompt. It indicates when the Supervisor should consider the task complete and present the final output to the user. Without a clear "FINISH" directive, the Supervisor might continue delegating tasks unnecessarily or fail to deliver a coherent and finalized result to the user. It signals that all necessary sub-tasks have been executed and the user's request has been fulfilled.

{% hint style="info" %} It's important to understand that the Supervisor plays a very distinct role from Workers. Unlike Workers, which can be tailored with highly specific instructions, the Supervisor operates most effectively with general directives, which allow it to plan and delegate tasks as it deems appropriate. If you're new to multi-agent systems, we recommend sticking with the default Supervisor prompt {% endhint %}

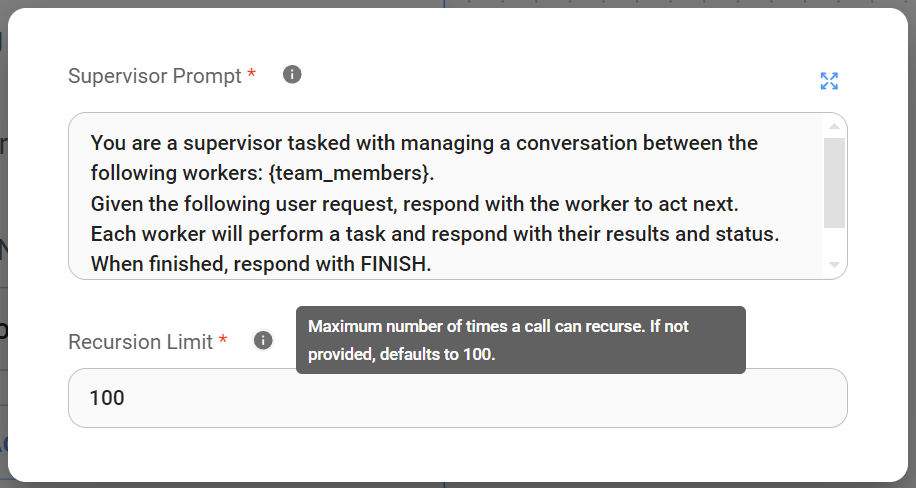

Understanding Recursion Limit in Supervisor node:

This parameter restricts the maximum depth of nested function calls within our application. In our current context, it limits how many times the Supervisor can trigger itself within a single workflow execution. This is important for preventing unbounded recursion and ensuring resources are used efficiently.

How the Supervisor works

Upon receiving a user query, the Supervisor initiates the workflow by analyzing the request and discerning the user's intended outcome.

Then, leveraging the {team_members} variable in the Supervisor Prompt, which only provides a list of available Worker AI names, the Supervisor infers each Worker's specialty and strategically selects the most suitable Worker for each task within the workflow.

{% hint style="info" %} Since the Supervisor only has the Workers' names to infer their functionality inside the workflow, it is very important that those names are set accordingly. Clear, concise, and descriptive names that accurately reflect the Worker's role or area of expertise are crucial for the Supervisor to make informed decisions when delegating tasks. This ensures that the right Worker is selected for the right job, maximizing the system's accuracy in fulfilling the user's request. {% endhint %}

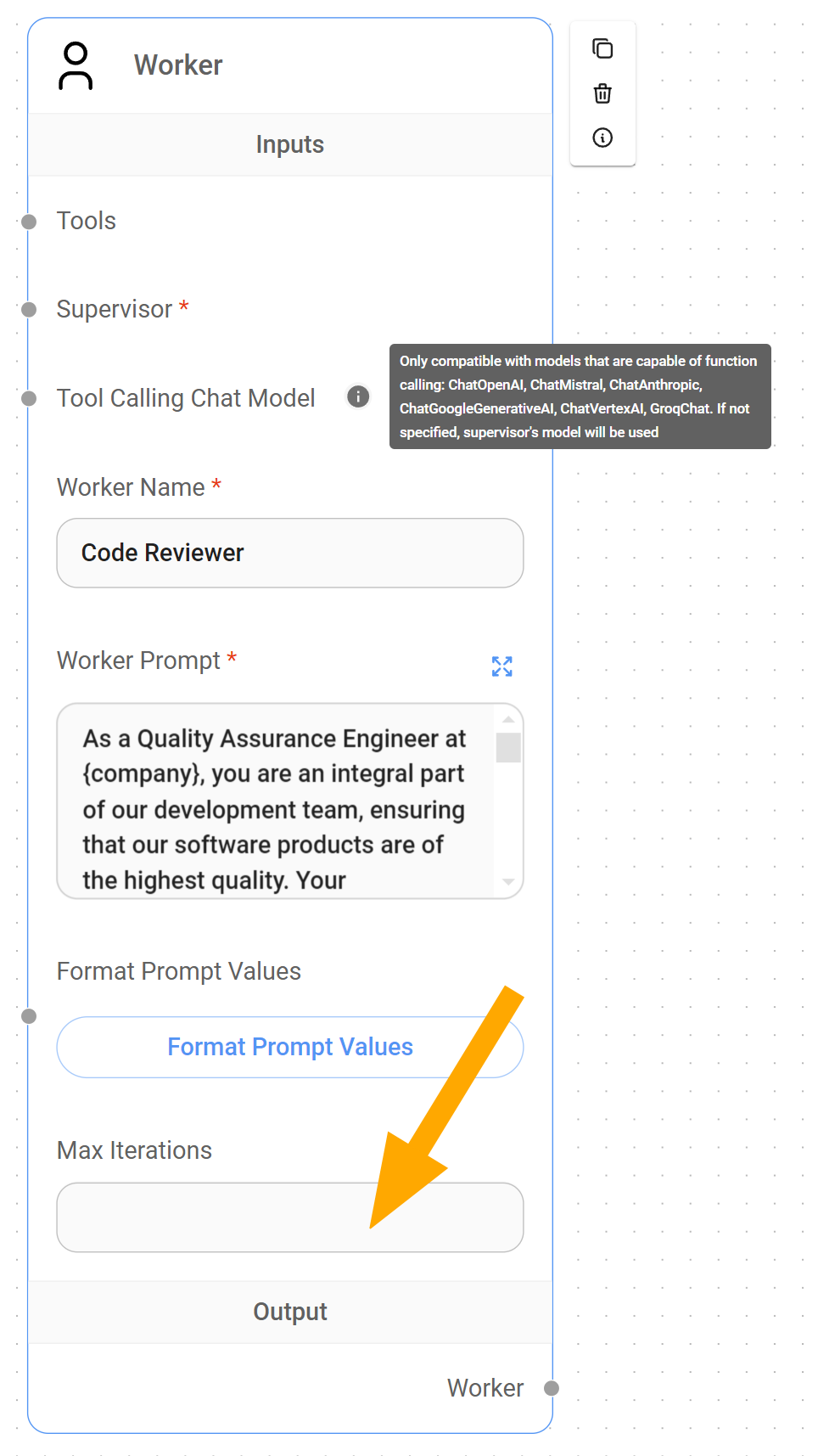

The Worker

The Worker, as a specialized agent instructed to handle a specific task within the system, requires two essential components to function correctly:

- A Supervisor: Each Worker must be connected to the Supervisor so it can be called upon when a task needs to be delegated. This connection establishes the essential hierarchical relationship within the multi-agent system, ensuring that the Supervisor can efficiently distribute work to the appropriate specialized Workers.

- A Chat Model node capable of function calling: By default, Workers inherit the Supervisor's Chat Model node unless assigned one directly. This function-calling capability enables the Worker to interact with tools designed for its specialized task.

{% hint style="info" %} The ability to assign different Chat Models to each Worker provides significant flexibility and optimization opportunities for our application. By selecting Chat Models tailored to specific tasks, we can leverage more cost-effective solutions for simpler tasks and reserve specialized, potentially more expensive, models when truly necessary. {% endhint %}

Understanding Max Iteration parameter in Workers

LangChain refers to Max Iterations Cap as a important control mechanism for preventing haywire within an agentic system. In our current this context, it serves us as a guardrail against excessive, potentially infinite, interactions between the Supervisor and Worker.

Unlike the Supervisor node's Recursion Limit, which restricts how many times the Supervisor can call itself, the Worker node's Max Iteration parameter limits how many times a Supervisor can iterated or query a specific Worker.

By capping or limiting the Max Iteration, we ensure that costs remain under control, even in cases of unexpected system behavior.

Example: A practical user case

Now that we've established a foundational understanding of how Multi-Agent systems work within Aimicromind, let's explore a practical application.

Imagine a Lead Outreach multi-agent system (available in the Marketplace) designed to automate the process of identifying, qualifying, and engaging with potential leads. This system would utilize a Supervisor to orchestrate the following two Workers:

- Lead Researcher: This Worker, using the Google Search Tool, will be responsible for gathering potential leads based on user-defined criteria.

- Lead Sales Generator: This Worker will utilize the information gathered by the Lead Researcher to create personalized email drafts for the sales team.

Background: A user working at Solterra Renewables wants to gather available information about Evergreen Energy Group, a reputable renewable energy company located in the UK, and target its CEO, Amelia Croft, as a potential lead.

User Request: The Solterra Renewables employee provides the following query to the multi-agent system: "I need information about Evergreen Energy Group and Amelia Croft as a potential new customer for our business."

- Supervisor:

- The Supervisor receives the user request and delegates the "Lead Research" task to the

Lead Researcher Worker.

- The Supervisor receives the user request and delegates the "Lead Research" task to the

- Lead Researcher Worker:

- The Lead Researcher Worker, using the Google Search Tool, gathers information about Evergreen Energy Group, focusing on:

- Company background, industry, size, and location.

- Recent news and developments.

- Key executives, including confirming Amelia Croft's role as CEO.

- The Lead Researcher sends the gathered information back to the

Supervisor.

- The Lead Researcher Worker, using the Google Search Tool, gathers information about Evergreen Energy Group, focusing on:

- Supervisor:

- The Supervisor receives the research data from the Lead Researcher Worker and confirms that Amelia Croft is a relevant lead.

- The Supervisor delegates the "Generate Sales Email" task to the

Lead Sales Generator Worker, providing:- The research information on Evergreen Energy Group.

- Amelia Croft's email.

- Context about Solterra Renewables.

- Lead Sales Generator Worker:

- The Lead Sales Generator Worker crafts a personalized email draft tailored to Amelia Croft, taking into account:

- Her role as CEO and the relevance of Solterra Renewables' services to her company.

- Information from the research about Evergreen Energy Group's current focus or projects.

- The Lead Sales Generator Worker sends the completed email draft back to the

Supervisor.

- The Lead Sales Generator Worker crafts a personalized email draft tailored to Amelia Croft, taking into account:

- Supervisor:

- The Supervisor receives the generated email draft and issues the "FINISH" directive.

- The Supervisor outputs the email draft back to the user, the

Solterra Renewables employee.

- User Receives Output: The Solterra Renewables employee receives a personalized email draft ready to be reviewed and sent to Amelia Croft.

Video Tutorials (Coming soon)

description: Learn the Fundamentals of Sequential Agents in Aimicromind, written by @toi500

Sequential Agents

This guide offers a complete overview of the Sequential Agent AI system architecture within Aimicromind, exploring its core components and workflow design principles.

{% hint style="warning" %} Disclaimer: This documentation is intended to help aimicromind users understand and build conversational workflows using the Sequential Agent system architecture. It is not intended to be a comprehensive technical reference for the LangGraph framework and should not be interpreted as defining industry standards or core LangGraph concepts. {% endhint %}

Concept

Built on top of LangGraph, Aimicromind's Sequential Agents architecture facilitates the development of conversational agentic systems by structuring the workflow as a directed cyclic graph (DCG), allowing controlled loops and iterative processes.

This graph, composed of interconnected nodes, defines the sequential flow of information and actions, enabling the agents to process inputs, execute tasks, and generate responses in a structured manner.

Understanding Sequential Agents' DCG Architecture

This architecture simplifies the management of complex conversational workflows by defining a clear and understandable sequence of operations through its DCG structure.

Let's explore some key elements of this approach:

{% tabs %} {% tab title="Core Principles" %}

- Node-based processing: Each node in the graph represents a discrete processing unit, encapsulating its own functionality like language processing, tool execution, or conditional logic.

- Data flow as connections: Edges in the graph represent the flow of data between nodes, where the output of one node becomes the input for the subsequent node, enabling a chain of processing steps.

- State management: State is managed as a shared object, persisting throughout the conversation. This allows nodes to access relevant information as the workflow progresses. {% endtab %}

{% tab title="Terminology" %}

- Flow: The movement or direction of data within the workflow. It describes how information passes between nodes during a conversation.

- Workflow: The overall design and structure of the system. It's the blueprint that defines the sequence of nodes, their connections, and the logic that orchestrates the conversation flow.

- State: A shared data structure that represents the current snapshot of the conversation. It includes the conversation history

state.messagesand any custom State variables defined by the user. - Custom State: User-defined key-value pairs added to the state object to store additional information relevant to the workflow.

- Tool: An external system, API, or service that can be accessed and executed by the workflow to perform specific tasks, such as retrieving information, processing data, or interacting with other applications.

- Human-in-the-Loop (HITL): A feature that allows human intervention in the workflow, primarily during tool execution. It enables a human reviewer to approve or reject a tool call before it's executed.

- Parallel node execution: It refers to the ability to execute multiple nodes concurrently within a workflow by using a branching mechanism. This means that different branches of the workflow can process information or interact with tools simultaneously, even though the overall flow of execution remains sequential. {% endtab %} {% endtabs %}

Sequential Agents vs Multi-Agents

While both Multi-Agent and Sequential Agent systems in aimicromind are built upon the LangGraph framework and share the same fundamental principles, the Sequential Agent architecture provides a lower level of abstraction1, offering more granular control over every step of the workflow.

Multi-Agent systems, which are characterized by a hierarchical structure with a central supervisor agent delegating tasks to specialized worker agents, excel at handling complex workflows by breaking them down into manageable sub-tasks. This decomposition into sub-tasks is made possible by pre-configuring core system elements under the hood, such as condition nodes, which would require manual setup in a Sequential Agent system. As a result, users can more easily build and manage teams of agents.

In contrast, Sequential Agent systems operate like a streamlined assembly line, where data flows sequentially through a chain of nodes, making them ideal for tasks demanding a precise order of operations and incremental data refinement. Compared to the Multi-Agent system, its lower-level access to the underlying workflow structure makes it fundamentally more flexible and customizable, offering parallel node execution and full control over the system logic, incorporating conditions, state, and loop nodes into the workflow, allowing for the creation of new dynamic branching capabilities.

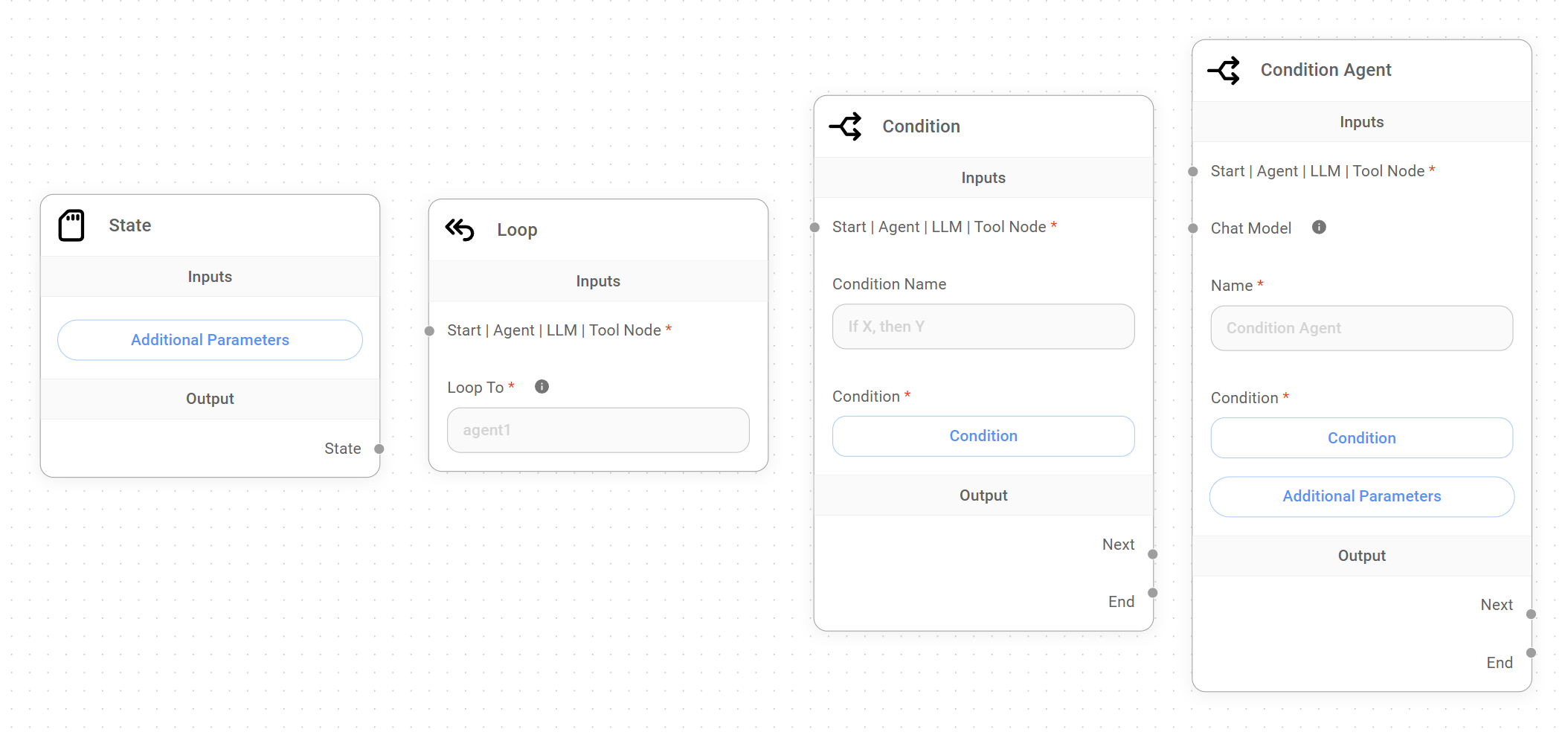

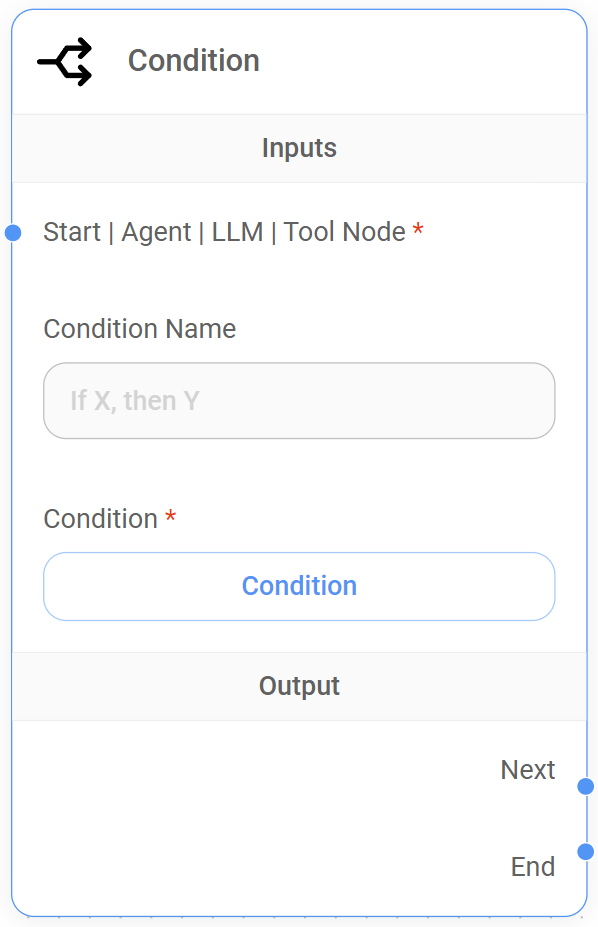

Introducing State, Loop and Condition Nodes

Aimicromind's Sequential Agents offer new capabilities for creating conversational systems that can adapt to user input, make decisions based on context, and perform iterative tasks.

These capabilities are made possible by the introduction of four new core nodes; the State Node, the Loop Node, and two Condition Nodes.

- State Node: We define State as a shared data structure that represents the current snapshot of our application or workflow. The State Node allows us to add a custom State to our workflow from the start of the conversation. This custom State is accessible and modifiable by other nodes in the workflow, enabling dynamic behavior and data sharing.

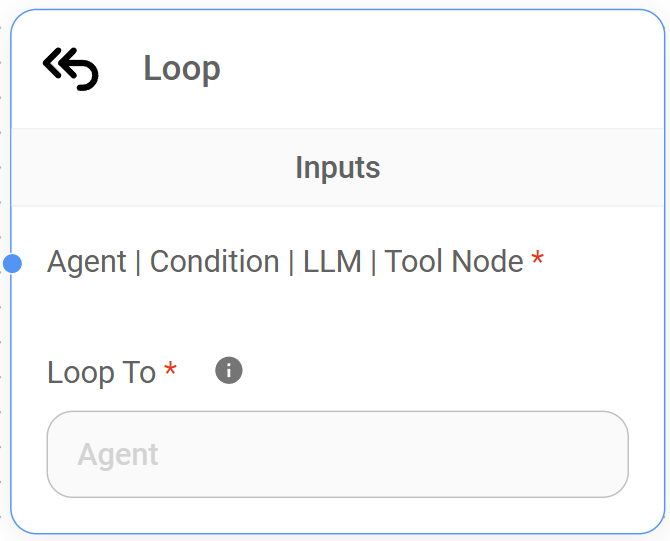

- Loop Node: This node introduces controlled cycles within the Sequential Agent workflow, enabling iterative processes where a sequence of nodes can be repeated based on specific conditions. This allows agents to refine outputs, gather additional information from the user, or perform tasks multiple times.

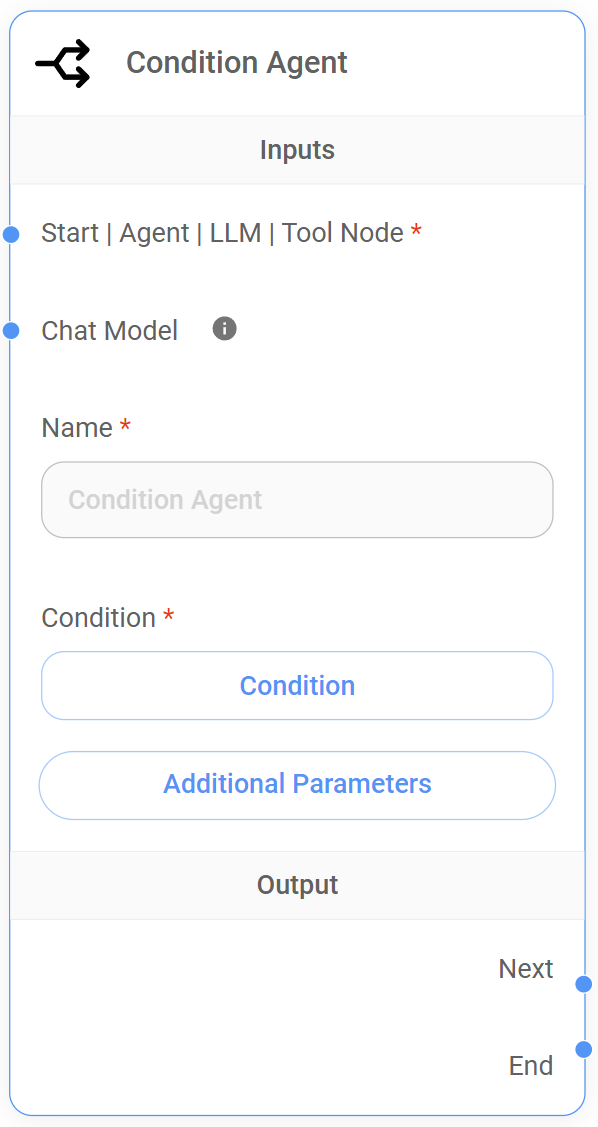

- Condition Nodes: The Condition and Condition Agent Node provide the necessary control to create complex conversational flows with branching paths. The Condition Node evaluates conditions directly, while the Condition Agent Node uses an agent's reasoning to determine the branching logic. This allows us to dynamically guide the flow's behavior based on user input, the custom State, or results of actions taken by other nodes.

Choosing the right system

Selecting the ideal system for your application depends on understanding your specific workflow needs. Factors like task complexity, the need for parallel processing, and your desired level of control over data flow are all key considerations.

- For simplicity: If your workflow is relatively straightforward, where tasks can be completed one after the other and therefore does not require parallel node execution or Human-in-the-Loop (HITL), the Multi-Agent approach offers ease of use and quick setup.

- For flexibility: If your workflow needs parallel execution, dynamic conversations, custom State management, and the ability to incorporate HITL, the Sequential Agent approach provides the necessary flexibility and control.

Here's a table comparing Multi-Agent and Sequential Agent implementations in Aimicromind, highlighting key differences and design considerations:

| Multi-Agent | Sequential Agent | |

|---|---|---|

| Structure | Hierarchical; Supervisor delegates to specialized Workers. | Linear, cyclic and/or branching; nodes connect in a sequence, with conditional logic for branching. |

| Workflow | Flexible; designed for breaking down a complex task into a sequence of sub-tasks, completed one after another. | Highly flexible; supports parallel node execution, complex dialogue flows, branching logic, and loops within a single conversation turn. |

| Parallel Node Execution | No; Supervisor handles one task at a time. | Yes; can trigger multiple actions in parallel within a single run. |

| State Management | Implicit; State is in place, but is not explicitly managed by the developer. | Explicit; State is in place, and developers can define and manage an initial or custom State using the State Node and the "Update State" field in various nodes. |

| Tool Usage | Workers can access and use tools as needed. | Tools are accessed and executed through Agent Nodes and Tool Nodes. |

| Human-in-the-Loop (HITL) | HITL is not supported. | Supported through the Agent Node and Tool Node's "Require Approval" feature, allowing human review and approval or rejection of tool execution. |

| Complexity | Higher level of abstraction; simplifies workflow design. | Lower level of abstraction; more complex workflow design, requiring careful planning of node interactions, custom State management, and conditional logic. |

| Ideal Use Cases |

|

|

{% hint style="info" %} Note: Even though Multi-Agent systems are technically a higher-level layer built upon the Sequential Agent architecture, they offer a distinct user experience and approach to workflow design. The comparison above treats them as separate systems to help you select the best option for your specific needs. {% endhint %}

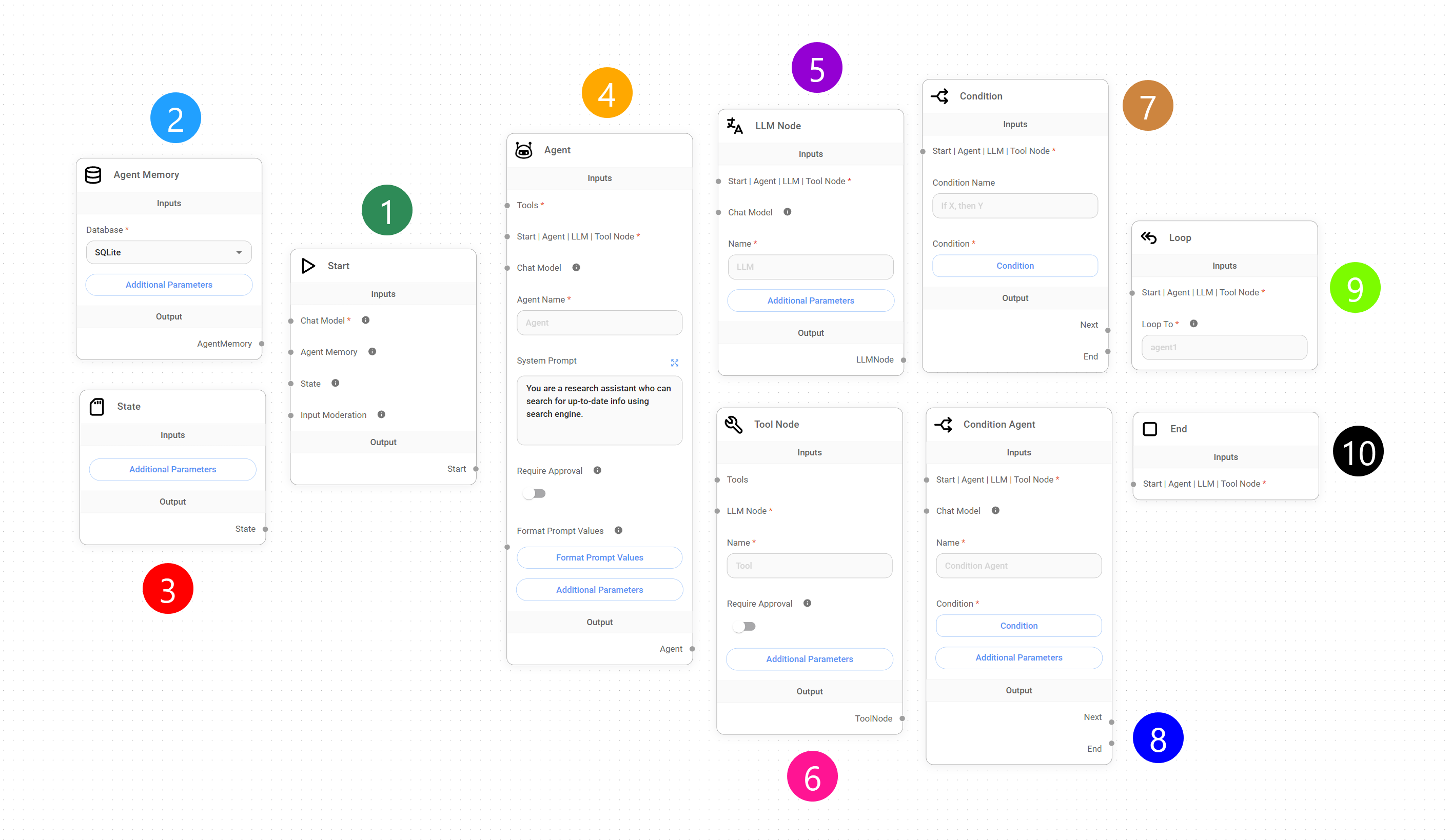

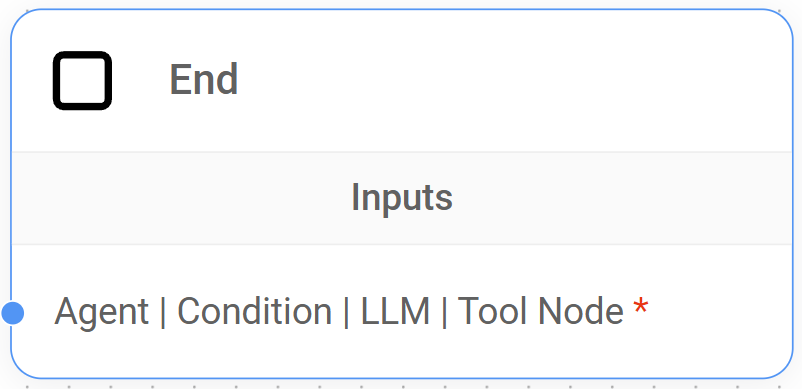

Sequential Agents Nodes

Sequential Agents bring a whole new dimension to Aimicromind, introducing 10 specialized nodes, each serving a specific purpose, offering more control over how our conversational agents interact with users, process information, make decisions, and execute actions.

The following sections aim to provide a comprehensive understanding of each node's functionality, inputs, outputs, and best practices, ultimately enabling you to craft sophisticated conversational workflows for a variety of applications.

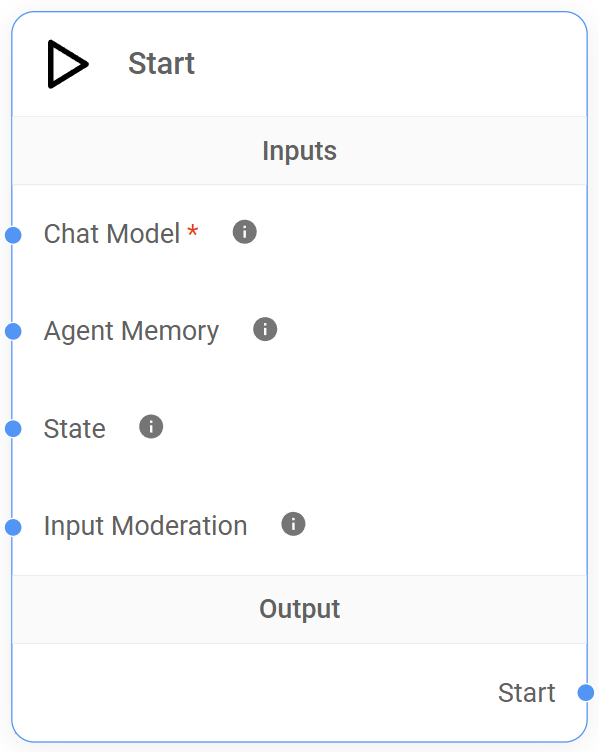

1. Start Node

As its name implies, the Start Node is the entry point for all workflows in the Sequential Agent architecture. It receives the initial user query, initializes the conversation State, and sets the flow in motion.

Understanding the Start Node

The Start Node ensures that our conversational workflows have the necessary setup and context to function correctly. It's responsible for setting up key functionalities that will be used throughout the rest of the workflow:

- Defining the default LLM: The Start Node requires us to specify a Chat Model (LLM) compatible with function calling, enabling agents in the workflow to interact with tools and external systems. It will be the default LLM used under the hood in the workflow.

- Initializing Memory: We can optionally connect an Agent Memory Node to store and retrieve conversation history, enabling more context-aware responses.

- Setting a custom State: By default, the State contains an immutable

state.messagesarray, which acts as the transcript or history of the conversation between the user and the agents. The Start Node allows you to connect a custom State to the workflow adding a State Node, enabling the storage of additional information relevant to your workflow - Enabling moderation: Optionally, we can connect Input Moderation to analyze the user's input and prevent potentially harmful content from being sent to the LLM.

Inputs

| Required | Description | |

|---|---|---|

| Chat Model | Yes | The default LLM that will power the conversation. Only compatible with models that are capable of function calling. |

| Agent Memory Node | No | Connect an Agent Memory Node to enable persistence and context preservation. |

| State Node | No | Connect a State Node to set a custom State, a shared context that can be accessed and modified by other nodes in the workflow. |

| Input Moderation | No | Connect a Moderation Node to filter content by detecting text that could generate harmful output, preventing it from being sent to the LLM. |

Outputs

The Start Node can connect to the following nodes as outputs:

- Agent Node: Routes the conversation flow to an Agent Node, which can then execute actions or access tools based on the conversation's context.

- LLM Node: Routes the conversation flow to an LLM Node for processing and response generation.

- Condition Agent Node: Connects to a Condition Agent Node to implement branching logic based on the agent's evaluation of the conversation.

- Condition Node: Connects to a Condition Node to implement branching logic based on predefined conditions.

Best Practices

{% tabs %} {% tab title="Pro Tips" %} Choose the right Chat Model

Ensure your selected LLM supports function calling, a key feature for enabling agent-tool interactions. Additionally, choose an LLM that aligns with the complexity and requirements of your application. You can override the default LLM by setting it at the Agent/LLM/Condition Agent node level when necessary.

Consider context and persistence

If your use case demands it, utilize Agent Memory Node to maintain context and personalize interactions. {% endtab %}

{% tab title="Potential Pitfalls" %} Incorrect Chat Model (LLM) selection

- Problem: The Chat Model selected in the Start Node is not suitable for the intended tasks or capabilities of the workflow, resulting in poor performance or inaccurate responses.

- Example: A workflow requires a Chat Model with strong summarization capabilities, but the Start Node selects a model optimized for code generation, leading to inadequate summaries.

- Solution: Choose a Chat Model that aligns with the specific requirements of your workflow. Consider the model's strengths, weaknesses, and the types of tasks it excels at. Refer to the documentation and experiment with different models to find the best fit.

Overlooking Agent Memory Node configuration

- Problem: The Agent Memory Node is not properly connected or configured, resulting in the loss of conversation history data between sessions.

- Example: You intend to use persistent memory to store user preferences, but the Agent Memory Node is not connected to the Start Node, causing preferences to be reset on each new conversation.

- Solution: Ensure that the Agent Memory Node is connected to the Start Node and configured with the appropriate database (SQLite). For most use cases, the default SQLite database will be sufficient.

Inadequate Input Moderation

- Problem: The "Input Moderation" is not enabled or configured correctly, allowing potentially harmful or inappropriate user input to reach the LLM and generate undesirable responses.

- Example: A user submits offensive language, but the input moderation fails to detect it or is not set up at all, allowing the query to reach the LLM.

- Solution: Add and configure an input moderation node in the Start Node to filter out potentially harmful or inappropriate language. Customize the moderation settings to align with your specific requirements and use cases. {% endtab %} {% endtabs %}

2. Agent Memory Node

The Agent Memory Node provides a mechanism for persistent memory storage, allowing the Sequential Agent workflow to retain the conversation history state.messages and any custom State previously defined across multiple interactions

This long-term memory is essential for agents to learn from previous interactions, maintain context over extended conversations, and provide more relevant responses.

Where the data is recorded

By default, aimicromindutilizes its built-in SQLite database to store conversation history and custom state data, creating a "checkpoints" table to manage this persistent information.

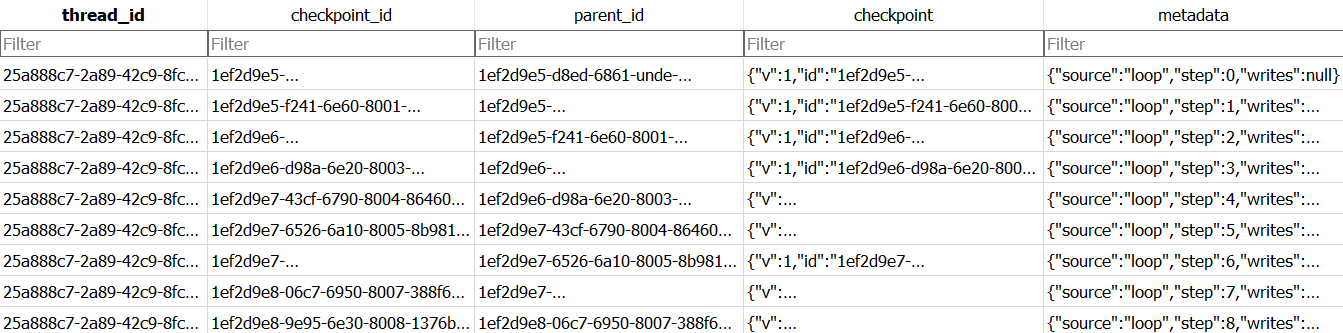

Understanding the "checkpoints" table structure and data format

This table stores snapshots of the system's State at various points during a conversation, enabling the persistence and retrieval of conversation history. Each row represents a specific point or "checkpoint" in the workflow's execution.

Table structure

- thread_id: A unique identifier representing a specific conversation session, our session ID. It groups together all checkpoints related to a single workflow execution.

- checkpoint_id: A unique identifier for each execution step (node execution) within the workflow. It helps track the order of operations and identify the State at each step.

- parent_id: Indicates the checkpoint_id of the preceding execution step that led to the current checkpoint. This establishes a hierarchical relationship between checkpoints, allowing for the reconstruction of the workflow's execution flow.

- checkpoint: Contains a JSON string representing the current State of the workflow at that specific checkpoint. This includes the values of variables, the messages exchanged, and any other relevant data captured at that point in the execution.

- metadata: Provides additional context about the checkpoint, specifically related to node operations.

How it works

As a Sequential Agent workflow executes, the system records a checkpoint in this table for each significant step. This mechanism provides several benefits:

- Execution tracking: Checkpoints enable the system to understand the execution path and the order of operations within the workflow.

- State management: Checkpoints store the State of the workflow at each step, including variable values, conversation history, and any other relevant data. This allows the system to maintain contextual awareness and make informed decisions based on the current State.

- Workflow resumption: If the workflow is paused or interrupted (e.g., due to a system error or user request), the system can use the stored checkpoints to resume execution from the last recorded State. This ensures that the conversation or task continues from where it left off, preserving the user's progress and preventing data loss.

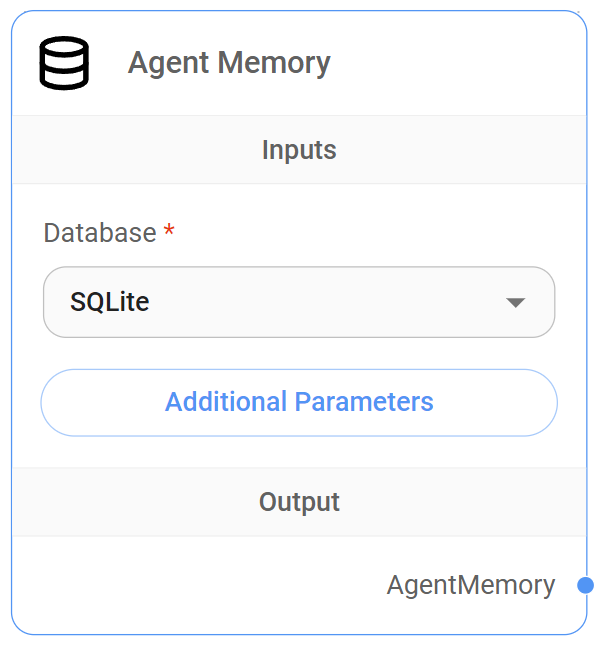

Inputs

The Agent Memory Node has no specific input connections.

Node Setup

| Required | Description | |

|---|---|---|

| Database | Yes | The type of database used for storing conversation history. Currently, only SQLite is supported. |

Additional Parameters

| Required | Description | |

|---|---|---|

| Database File Path | No | The file path to the SQLite database file. If not provided, the system will use a default location. |

Outputs

The Agent Memory Node interacts solely with the Start Node, making the conversation history available from the very beginning of the workflow.

Best Practices

{% tabs %} {% tab title="Pro Tips" %} Strategic use

Employ Agent Memory only when necessary. For simple, stateless interactions, it might be overkill. Reserve it for scenarios where retaining information across turns or sessions is essential. {% endtab %}

{% tab title="Potential Pitfalls" %} Unnecessary overhead

- The Problem: Using Agent Memory for every interaction, even when not needed, introduces unnecessary storage and processing overhead. This can slow down response times and increase resource consumption.

- Example: A simple weather chatbot that provides information based on a single user request doesn't need to store conversation history.

- Solution: Analyze the requirements of your system and only utilize Agent Memory when persistent data storage is essential for functionality or user experience. {% endtab %} {% endtabs %}

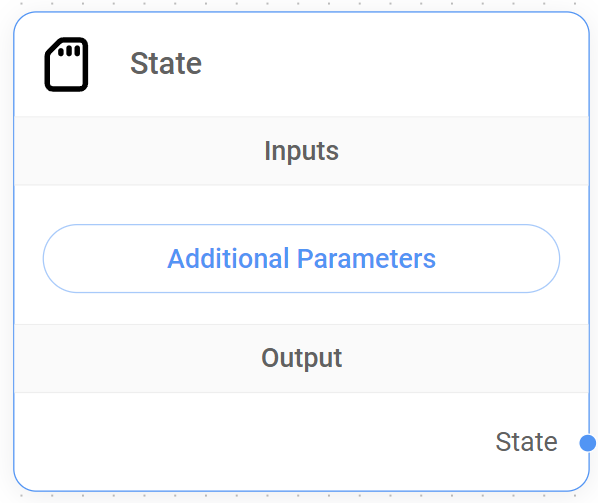

3. State Node

The State Node, which can only be connected to the Start Node, provides a mechanism to set a user-defined or custom State into our workflow from the start of the conversation. This custom State is a JSON object that is shared and can be updated by nodes in the graph, passing from one node to another as the flow progresses.

Understanding the State Node

By default, the State includes a state.messages array, which acts as our conversation history. This array stores all messages exchanged between the user and the agents, or any other actors in the workflow, preserving it throughout the workflow execution.

Since by definition this state.messages array is immutable and cannot be modified, the purpose of the State Node is to allow us to define custom key-value pairs, expanding the state object to hold any additional information relevant to our workflow.

{% hint style="info" %} When no Agent Memory Node is used, the State operates in-memory and is not persisted for future use. {% endhint %}

Inputs

The State Node has no specific input connections.

Outputs

The State Node can only connect to the Start Node, allowing the setup of a custom State from the beginning of the workflow and allowing other nodes to access and potentially modify this shared custom State.

Additional Parameters

| Required | Description | |

|---|---|---|

| Custom State | Yes | A JSON object representing the initial custom State of the workflow. This object can contain any key-value pairs relevant to the application. |

How to set a custom State

Specify the key, operation type, and default value for the state object. The operation type can be either "Replace" or "Append".

- Replace

- Replace the existing value with the new value.

- If the new value is null, the existing value will be retained.

- Append

- Append the new value to the existing value.

- Default values can be empty or an array. Ex: ["a", "b"]

- Final value is an array.

Example using JS

{% code overflow="wrap" %}

{

aggregate: {

value: (x, y) => x.concat(y), // here we append the new message to the existing messages

default: () => []

}

}

{% endcode %}

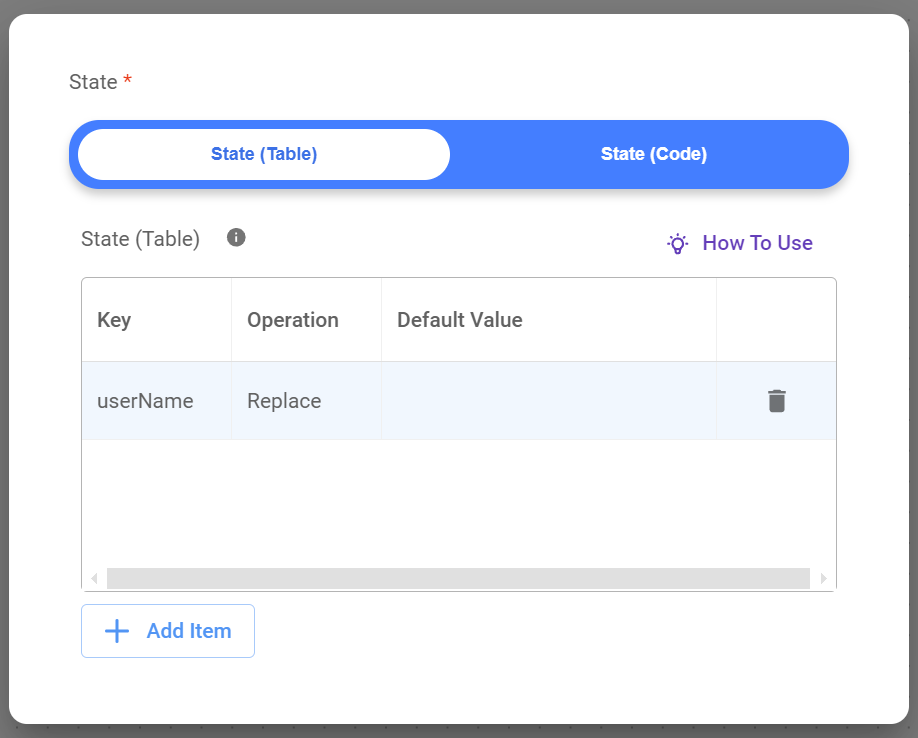

Example using Table

To define a custom State using the table interface in the State Node, follow these steps:

- Add item: Click the "+ Add Item" button to add rows to the table. Each row represents a key-value pair in your custom State.

- Specify keys: In the "Key" column, enter the name of each key you want to define in your state object. For example, you might have keys like "userName", "userLocation", etc.

- Choose operations: In the "Operation" column, select the desired operation for each key. You have two options:

- Replace: This will replace the existing value of the key with the new value provided by a node. If the new value is null, the existing value will be retained.

- Append: This will append the new value to the existing value of the key. The final value will be an array.

- Set default values: In the "Default Value" column, enter the initial value for each key. This value will be used if no other node provides a value for the key. The default value can be empty or an array.

Example Table

| Key | Operation | Default Value |

|---|---|---|

| userName | Replace | null |

- This table defines one key in the custom State:

userName. - The

userNamekey will use the "Replace" operation, meaning its value will be updated whenever a node provides a new value. - The

userNamekey has a default value of null, indicating that it has no initial value.

{% hint style="info" %} Remember that this table-based approach is an alternative to defining the custom State using JavaScript. Both methods achieve the same result. {% endhint %}

Example using API

{

"question": "hello",

"overrideConfig": {

"stateMemory": [

{

"Key": "userName",

"Operation": "Replace",

"Default Value": "somevalue"

}

]

}

}

Best Practices

{% tabs %} {% tab title="Pro-Tips" %} Plan your custom State structure

Before building your workflow, design the structure of your custom State. A well-organized custom State will make your workflow easier to understand, manage, and debug.

Use meaningful key names

Choose descriptive and consistent key names that clearly indicate the purpose of the data they hold. This will improve the readability of your code and make it easier for others (or you in the future) to understand how the custom State is being used.

Keep custom State minimal

Only store information in the custom State that is essential for the workflow's logic and decision-making.

Consider State persistence

If you need to preserve State across multiple conversation sessions (e.g., for user preferences, order history, etc.), use the Agent Memory Node to store the State in a persistent database. {% endtab %}

{% tab title="Potential Pitfalls" %} Inconsistent State Updates

- Problem: Updating the custom State in multiple nodes without a clear strategy can lead to inconsistencies and unexpected behavior.

- Example

- Agent 1 updates

orderStatusto "Payment Confirmed". - Agent 2, in a different branch, updates

orderStatusto "Order Complete" without checking the previous status.

- Agent 1 updates

- Solution: Use Conditions Nodes to control the flow of the custom State updates and ensure that custom State transitions happen in a logical and consistent manner. {% endtab %} {% endtabs %}

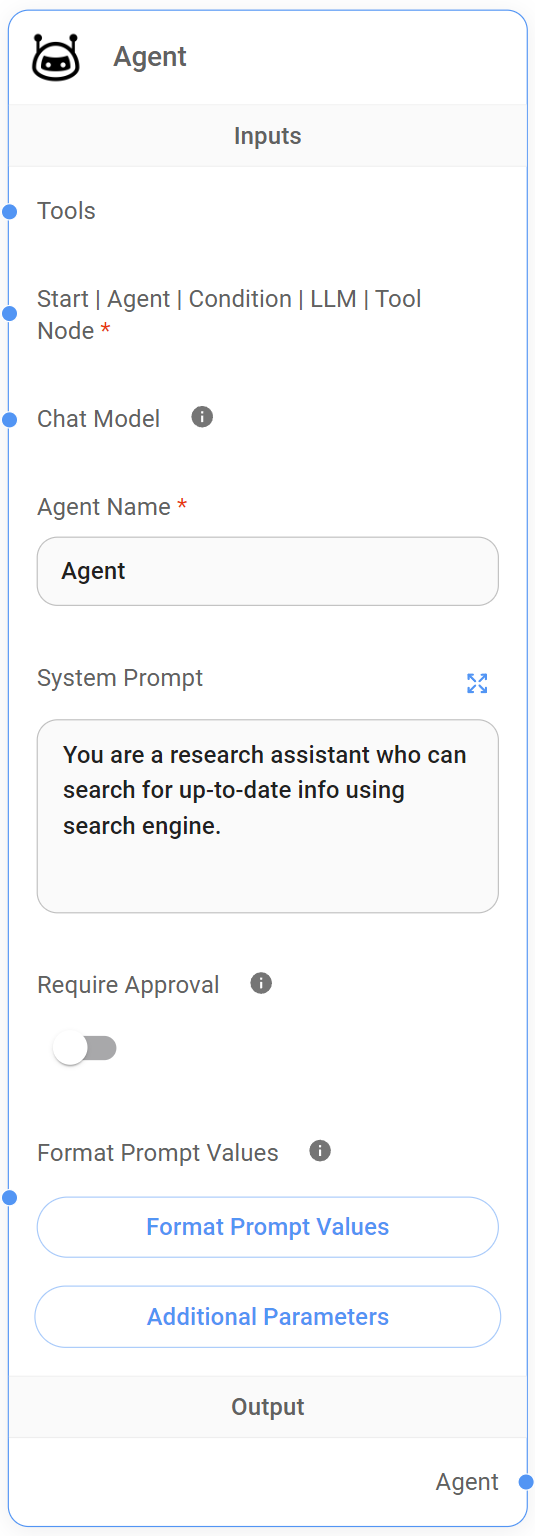

4. Agent Node

The Agent Node is a core component of the Sequential Agent architecture. It acts as a decision-maker and orchestrator within our workflow.

Understanding the Agent Node

Upon receiving input from preceding nodes, which always includes the full conversation history state.messages and any custom State at that point in the execution, the Agent Node uses its defined "persona", established by the System Prompt, to determine if external tools are necessary to fulfill the user's request.

- If tools are required, the Agent Node autonomously selects and executes the appropriate tool. This execution can be automatic or, for sensitive tasks, require human approval (HITL) before proceeding. Once the tool completes its operation, the Agent Node receives the results, processes them using the designated Chat Model (LLM), and generates a comprehensive response.

- In cases where no tools are needed, the Agent Node directly leverages the Chat Model (LLM) to formulate a response based on the current conversation context.

Inputs

| Required | Description | |

|---|---|---|

| External Tools | No | Provides the Agent Node with access to a suite of external tools, enabling it to perform actions and retrieve information. |

| Chat Model | No | Add a new Chat Model to overwrite the default Chat Model (LLM) of the workflow. Only compatible with models that are capable of function calling. |

| Start Node | Yes | Receives the initial user input, along with the custom State (if set up) and the rest of the default state.messages array from the Start Node. |

| Condition Node | Yes | Receives input from a preceding Condition Node, enabling the Agent Node to take actions or guide the conversation based on the outcome of the Condition Node's evaluation. |

| Condition Agent Node | Yes | Receives input from a preceding Condition Agent Node, enabling the Agent Node to take actions or guide the conversation based on the outcome of the Condition Agent Node's evaluation. |

| Agent Node | Yes | Receives input from a preceding Agent Node, enabling chained agent actions and maintaining conversational context |

| LLM Node | Yes | Receives the output from LLM Node, enabling the Agent Node to process the LLM's response. |

| Tool Node | Yes | Receives the output from a Tool Node, enabling the Agent Node to process and integrate tool's outputs into its response. |

{% hint style="info" %} The Agent Node requires at least one connection from the following nodes: Start Node, Agent Node, Condition Node, Condition Agent Node, LLM Node, or Tool Node. {% endhint %}

Outputs

The Agent Node can connect to the following nodes as outputs:

- Agent Node: Passes control to a subsequent Agent Node, enabling the chaining of multiple agent actions within a workflow. This allows for more complex conversational flows and task orchestration.

- LLM Node: Passes the agent's output to an LLM Node, enabling further language processing, response generation, or decision-making based on the agent's actions and insights.

- Condition Agent Node: Directs the flow to a Condition Agent Node. This node evaluates the Agent Node's output and its predefined conditions to determine the appropriate next step in the workflow.

- Condition Node: Similar to the Condition Agent Node, the Condition Node uses predefined conditions to assess the Agent Node's output, directing the flow along different branches based on the outcome.

- End Node: Concludes the conversation flow.

- Loop Node: Redirects the flow back to a previous node, enabling iterative or cyclical processes within the workflow. This is useful for tasks that require multiple steps or involve refining results based on previous interactions. For example, you might loop back to an earlier Agent Node or LLM Node to gather additional information or refine the conversation flow based on the current Agent Node's output.

Node Setup

| Required | Description | |

|---|---|---|

| Agent Name | Yes | Add a descriptive name to the Agent Node to enhance workflow readability and easily target it back when using loops within the workflow. |

| System Prompt | No | Defines the agent's 'persona' and guides its behavior. For example, "You are a customer service agent specializing in technical support [...]." |

| Require Approval | No | Activates the Human-in-the-loop (HITL) feature. If set to 'True,' the Agent Node will request human approval before executing any tool. This is particularly valuable for sensitive operations or when human oversight is desired. Defaults to 'False,' allowing the Agent Node to execute tools autonomously. |

Additional Parameters

| Required | Description | |

|---|---|---|

| Human Prompt | No | This prompt is appended to the state.messages array as a human message. It allows us to inject a human-like message into the conversation flow after the Agent Node has processed its input and before the next node receives the Agent Node's output. |

| Approval Prompt | No | A customizable prompt presented to the human reviewer when the HITL feature is active. This prompt provides context about the tool execution, including the tool's name and purpose. The variable {tools} within the prompt will be dynamically replaced with the actual list of tools suggested by the agent, ensuring the human reviewer has all necessary information to make an informed decision. |

| Approve Button Text | No | Customizes the text displayed on the button for approving tool execution in the HITL interface. This allows for tailoring the language to the specific context and ensuring clarity for the human reviewer. |

| Reject Button Text | No | Customizes the text displayed on the button for rejecting tool execution in the HITL interface. Like the Approve Button Text, this customization enhances clarity and provides a clear action for the human reviewer to take if they deem the tool execution unnecessary or potentially harmful. |

| Update State | No | Provides a mechanism to modify the shared custom State object within the workflow. This is useful for storing information gathered by the agent or influencing the behavior of subsequent nodes. |

| Max Iteration | No | Limits the number of iterations an Agent Node can make within a single workflow execution. |

Best Practices

{% tabs %} {% tab title="Pro Tips" %} Clear system prompt

Craft a concise and unambiguous System Prompt that accurately reflects the agent's role and capabilities. This guides the agent's decision-making and ensures it acts within its defined scope.

Strategic tool selection

Choose and configure the tools available to the Agent Node, ensuring they align with the agent's purpose and the overall goals of the workflow.

HITL for sensitive tasks

Utilize the 'Require Approval' option for tasks involving sensitive data, requiring human judgment, or carrying a risk of unintended consequences.

Leverage custom State updates

Update the custom State object strategically to store gathered information or influence the behavior of downstream nodes. {% endtab %}

{% tab title="Potential Pitfalls" %} Agent inaction due to tool overload

- Problem: When an Agent Node has access to a large number of tools within a single workflow execution, it might struggle to decide which tool is the most appropriate to use, even when a tool is clearly necessary. This can lead to the agent failing to call any tool at all, resulting in incomplete or inaccurate responses.

- Example: Imagine a customer support agent designed to handle a wide range of inquiries. You've equipped it with tools for order tracking, billing information, product returns, technical support, and more. A user asks, "What's the status of my order?" but the agent, overwhelmed by the number of potential tools, responds with a generic answer like, "I can help you with that. What's your order number?" without actually using the order tracking tool.

- Solution

- Refine system prompts: Provide clearer instructions and examples within the Agent Node's System Prompt to guide it towards the correct tool selection. If needed, emphasize the specific capabilities of each tool and the situations in which they should be used.

- Limit tool choices per node: If possible, break down complex workflows into smaller, more manageable segments, each with a more focused set of tools. This can help reduce the cognitive load on the agent and improve its tool-selection accuracy.

Overlooking HITL for sensitive tasks

- Problem: Failing to utilize the Agent Node's "Require Approval" (HITL) feature for tasks involving sensitive information, critical decisions, or actions with potential real-world consequences can lead to unintended outcomes or damage to user trust.

- Example: Your travel booking agent has access to a user's payment information and can automatically book flights and hotels. Without HITL, a misinterpretation of user intent or an error in the agent's understanding could result in an incorrect booking or unauthorized use of the user's payment details.

- Solution

- Identify sensitive actions: Analyze your workflow and identify any actions that involve accessing or processing sensitive data (e.g., payment info, personal details).

- Implement "Require Approval": For these sensitive actions, enable the "Require Approval" option within the Agent Node. This ensures that a human reviews the agent's proposed action and the relevant context before any sensitive data is accessed or any irreversible action is taken.

- Design clear approval prompts: Provide clear and concise prompts for human reviewers, summarizing the agent's intent, the proposed action, and the relevant information needed for the reviewer to make an informed decision.

Unclear or incomplete system prompt

- Problem: The System Prompt provided to the Agent Node lacks the necessary specificity and context to guide the agent effectively in carrying out its intended tasks. A vague or overly general prompt can lead to irrelevant responses, difficulty in understanding user intent, and an inability to leverage tools or data appropriately.

- Example: You're building a travel booking agent, and your System Prompt simply states "You are a helpful AI assistant." This lacks the specific instructions and context needed for the agent to effectively guide users through flight searches, hotel bookings, and itinerary planning.

- Solution: Craft a detailed and context-aware System Prompt:

{% code overflow="wrap" %}

You are a travel booking agent. Your primary goal is to assist users in planning and booking their trips.

- Guide them through searching for flights, finding accommodations, and exploring destinations.

- Be polite, patient, and offer travel recommendations based on their preferences.

- Utilize available tools to access flight data, hotel availability, and destination information.

{% endcode %} {% endtab %} {% endtabs %}

5. LLM Node

Like the Agent Node, the LLM Node is a core component of the Sequential Agent architecture. Both nodes utilize the same Chat Models (LLMs) by default, providing the same basic language processing capabilities, but the LLM Node distinguishes itself in these key areas.

Key advantages of the LLM Node

While a detailed comparison between the LLM Node and the Agent Node is available in this section, here's a brief overview of the LLM Node's key advantages:

- Structured data: The LLM Node provides a dedicated feature to define a JSON schema for its output. This makes it exceptionally easy to extract structured information from the LLM's responses and pass that data to consequent nodes in the workflow. The Agent Node does not have this built-in JSON schema feature

- HITL: While both nodes support HITL for tool execution, the LLM Node defers this control to the Tool Node itself, providing more flexibility in workflow design.

Inputs

| Required | Description | |

|---|---|---|

| Chat Model | No | Add a new Chat Model to overwrite the default Chat Model (LLM) of the workflow. Only compatible with models that are capable of function calling. |

| Start Node | Yes | Receives the initial user input, along with the custom State (if set up) and the rest of the default state.messages array from the Start Node. |

| Agent Node | Yes | Receives output from an Agent Node, which may include tool execution results or agent-generated responses. |

| Condition Node | Yes | Receives input from a preceding Condition Node, enabling the LLM Node to take actions or guide the conversation based on the outcome of the Condition Node's evaluation. |

| Condition Agent Node | Yes | Receives input from a preceding Condition Agent Node, enabling the LLM Node to take actions or guide the conversation based on the outcome of the Condition Agent Node's evaluation. |

| LLM Node | Yes | Receives output from another LLM Node, enabling chained reasoning or information processing across multiple LLM Nodes. |

| Tool Node | Yes | Receives output from a Tool Node, providing the results of tool execution for further processing or response generation. |

{% hint style="info" %} The LLM Node requires at least one connection from the following nodes: Start Node, Agent Node, Condition Node, Condition Agent Node, LLM Node, or Tool Node. {% endhint %}

Node Setup

| Required | Description | |

|---|---|---|

| LLM Node Name | Yes | Add a descriptive name to the LLM Node to enhance workflow readability and easily target it back when using loops within the workflow. |

Outputs

The LLM Node can connect to the following nodes as outputs:

- Agent Node: Passes the LLM's output to an Agent Node, which can then use the information to decide on actions, execute tools, or guide the conversation flow.

- LLM Node: Passes the output to a subsequent LLM Node, enabling chaining of multiple LLM operations. This is useful for tasks like refining text generation, performing multiple analyses, or breaking down complex language processing into stages.

- Tool Node: Passes the output to a Tool Node, enabling the execution of a specific tool based on the LLM Node's instructions.

- Condition Agent Node: Directs the flow to a Condition Agent Node. This node evaluates the LLM Node's output and its predefined conditions to determine the appropriate next step in the workflow.

- Condition Node: Similar to the Condition Agent Node, the Condition Node uses predefined conditions to assess the LLM Node's output, directing the flow along different branches based on the outcome.

- End Node: Concludes the conversation flow.

- Loop Node: Redirects the flow back to a previous node, enabling iterative or cyclical processes within the workflow. This could be used to refine the LLM's output over multiple iterations.

Additional Parameters

| Required | Description | |

|---|---|---|

| System Prompt | No | Defines the agent's 'persona' and guides its behavior. For example, "You are a customer service agent specializing in technical support [...]." |

| Human Prompt | No | This prompt is appended to the state.messages array as a human message. It allows us to inject a human-like message into the conversation flow after the LLM Node has processed its input and before the next node receives the LLM Node's output. |

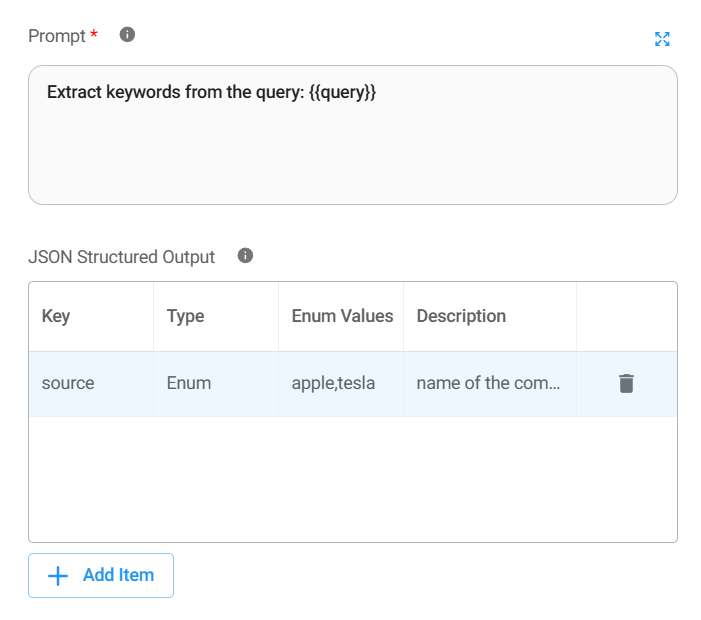

| JSON Structured Output | No | To instruct the LLM (Chat Model) to provide the output in JSON structure schema (Key, Type, Enum Values, Description). |

| Update State | No | Provides a mechanism to modify the shared custom State object within the workflow. This is useful for storing information gathered by the LLM Node or influencing the behavior of subsequent nodes. |

Best Practices

{% tabs %} {% tab title="Pro Tips" %} Clear system prompt

Craft a concise and unambiguous System Prompt that accurately reflects the LLM Node's role and capabilities. This guides the LLM Node's decision-making and ensures it acts within its defined scope.

Optimize for structured output

Keep your JSON schemas as straightforward as possible, focusing on the essential data elements. Only enable JSON Structured Output when you need to extract specific data points from the LLM's response or when downstream nodes require JSON input.

Strategic tool selection

Choose and configure the tools available to the LLM Node (via the Tool Node), ensuring they align with the application purpose and the overall goals of the workflow.

HITL for sensitive tasks

Utilize the 'Require Approval' option for tasks involving sensitive data, requiring human judgment, or carrying a risk of unintended consequences.

Leverage State updates

Update the custom State object strategically to store gathered information or influence the behavior of downstream nodes. {% endtab %}

{% tab title="Potential Pitfalls" %} Unintentional tool execution due to Incorrect HITL setup

- Problem: While the LLM Node can trigger Tool Nodes, it relies on the Tool Node's configuration for Human-in-the-Loop (HITL) approval. Failing to properly configure HITL for sensitive actions can lead to tools being executed without human review, potentially causing unintended consequences.

- Example: Your LLM Node is designed to interact with a tool that makes changes to user data. You intend to have a human review these changes before execution, but the connected Tool Node's "Require Approval" option is not enabled. This could result in the tool automatically modifying user data based solely on the LLM's output, without any human oversight.

- Solution

- Double-Check tool node settings: Always ensure that the "Require Approval" option is enabled within the settings of any Tool Node that handles sensitive actions.

- Test HITL thoroughly: Before deploying your workflow, test the HITL process to ensure that human review steps are triggered as expected and that the approval/rejection mechanism functions correctly.

Overuse or misunderstanding of JSON structured output

- Problem: While the LLM Node's JSON Structured Output feature is powerful, misusing it or not fully understanding its implications can lead to data errors.

- Example: You define a complex JSON schema for the LLM Node's output, even though the downstream tasks only require a simple text response. This adds unnecessary complexity and makes your workflow harder to understand and maintain. Additionally, if the LLM's output doesn't conform to the defined schema, it can cause errors in subsequent nodes.

- Solution

- Use JSON output strategically: Only enable JSON Structured Output when you have a clear need to extract specific data points from the LLM's response or when the downstream Tool Nodes require JSON input.

- Keep schemas simple: Design your JSON schemas to be as simple and concise as possible, focusing only on the data elements that are absolutely necessary for the task. {% endtab %} {% endtabs %}

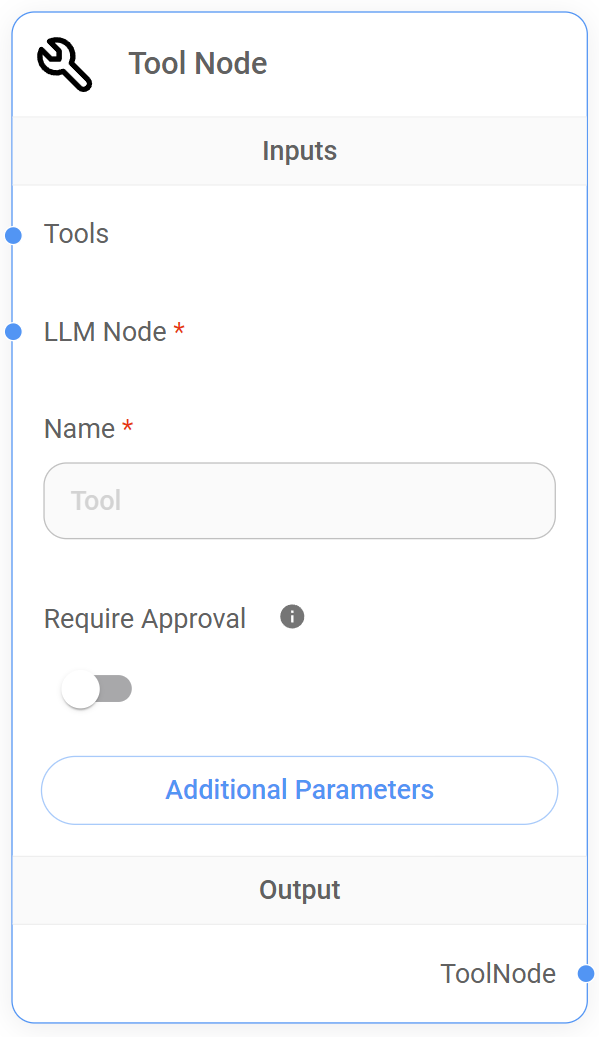

6. Tool Node

The Tool Node is a valuable component of Aimicromind's Sequential Agent system, enabling the integration and execution of external tools within conversational workflows. It acts as a bridge between the language-based processing of LLM Nodes and the specialized functionalities of external tools, APIs, or services.

Understanding the Tool Node

The Tool Node's primary function is to execute external tools based on instructions received from an LLM Node and to provide flexibility for Human-in-the-Loop (HITL) intervention in the tool execution process.

Here's a step-by-step explanation of how it works

- Tool Call Reception: The Tool Node receives input from an LLM Node. If the LLM's output contains the

tool_callsproperty, the Tool Node will proceed with tool execution. - Execution: The Tool Node directly passes the LLM's

tool_calls(which include the tool name and any required parameters) to the specified external tool. Otherwise, the Tool Node does not execute any tools in that particular workflow execution. It does not process or interpret the LLM's output in any way. - Human-in-the-Loop (HITL): The Tool Node allows for optional HITL, enabling human review and approval or rejection of tool execution before it occurs.

- Output passing: After the tool execution (either automatic or after HITL approval), the Tool Node receives the tool's output and passes it to the next node in the workflow. If the Tool Node's output is not connected to a subsequent node, the tool's output is returned to the original LLM Node for further processing.

Inputs

| Required | Description | |

|---|---|---|

| LLM Node | Yes | Receives the output from an LLM Node, which may or may not contain tool_calls property. If it is present, the Tool Node will use them to execute the specified tool. |

| External Tools | No | Provides the Tool Node with access to a suite of external tools, enabling it to perform actions and retrieve information. |

Node Setup

| Required | Description | |

|---|---|---|

| Tool Node Name | Yes | Add a descriptive name to the Tool Node to enhance workflow readability. |

| Require Approval (HITL) | No | Activates the Human-in-the-loop (HITL) feature. If set to 'True,' the Tool Node will request human approval before executing any tool. This is particularly valuable for sensitive operations or when human oversight is desired. Defaults to 'False,' allowing the Tool Node to execute tools autonomously. |

Outputs

The Tool Node can connect to the following nodes as outputs:

- Agent Node: Passes the Tool Node's output (the result of the executed tool) to an Agent Node. The Agent Node can then use this information to decide on actions, execute further tools, or guide the conversation flow.

- LLM Node: Passes the output to a subsequent LLM Node. This enables the integration of tool results into the LLM's processing, allowing for further analysis or refinement of the conversation flow based on the tool's output.

- Condition Agent Node: Directs the flow to a Condition tool Node. This node evaluates the Tool Node's output and its predefined conditions to determine the appropriate next step in the workflow.

- Condition Node: Similar to the Condition Agent Node, the Condition Node uses predefined conditions to assess the Tool Node's output, directing the flow along different branches based on the outcome.

- End Node: Concludes the conversation flow.